A Primer on Repeatable Random Numbers

If you're creating anything procedural, you're almost guaranteed to need random numbers at some point. And if you want to be able to produce the same result more than once, you'll need the random numbers to be repeatable.

In this article we'll use level/world generation in games as example use cases, but the lessons are applicable to many other things, such as procedural textures, models, music, etc. They are however not meant for applications with very strict requirements, such as cryptography.

Why would you want to repeat the same result more than once?

- Ability to revisit the same level/world. For example a certain level/world can be created from a specific seed. If the same seed is used again, you will get the same level/world again. You can for example do this in Minecraft.

- Persistent world that's generated on the fly. If you have a world that's generated on the fly as the player moves around in it, you may want locations to remain the same the first and subsequent times the player visits those locations (like in Minecraft, the upcoming game No Man's Sky, and others), rather than being different each time as if driven by dream logic.

- Same world for everyone. Maybe you want your game world to be the same for everyone who plays it, exactly as if it wasn't procedurally generated. This is for example the case in No Man's Sky. This is essentially the same as the ability to revisit the same level/world mentioned above, except that the same seed is always used.

We've mentioned the word seed a few times. A seed can be a number, text string, or other data that's used as input in order to get a random output. The defining trait for a seed is that the same seed will always produce the same output, but even the slightest change in the seed can produce a completely different output.

In this article we'll look into two different ways to produce random numbers - random number generators and random hash functions - and reasons for using one or the other. The things I know about this are hard earned and don't seem to be readily available elsewhere, so I thought it would be in order to write it down and share it.

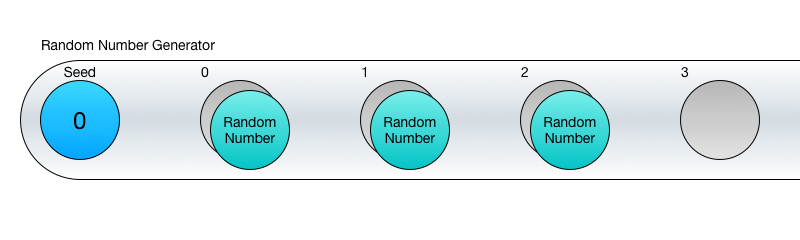

Random number generators

The most common way to produce random numbers is using a random number generator (or RNG for short). Many programming languages have RNG classes or methods included, and they have the word "random" in their name, so it's the obvious go-to approach to get started with random numbers.

A random number generator produces a sequence of random numbers based on an initial seed. In object-oriented languages, a random number generator is typically an object that is initialized with a seed. A method on that object can then be repeatedly called to produce random numbers.

The code in C# could look like this:

Random randomSequence = new Random(12345);

int randomNumber1 = randomSequence.Next();

int randomNumber2 = randomSequence.Next();

int randomNumber3 = randomSequence.Next();

In this case we're getting random non-negative integers from 0 up to, but not including, the maximum possible integer value (2147483647), but it's trivial to convert this to a random integer in a specific range, or a random floating point number between 0 and 1 or similar. Often methods are provided that do this out of the box.
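As a minimal sketch of those conversions, the helpers below lean on two methods that System.Random provides out of the box, Next(minValue, maxValue) and NextDouble(). The class and method names are made up for this example.

```csharp
using System;

public static class RandomRangeExample
{
    // Integer in [min, max). The upper bound is exclusive in System.Random.
    public static int IntInRange(Random rng, int min, int max)
    {
        return rng.Next(min, max);
    }

    // Floating point number in [0, 1).
    public static double Unit(Random rng)
    {
        return rng.NextDouble();
    }

    // Floating point number scaled from [0, 1) onto the span min..max.
    // Floating point rounding can in rare cases land exactly on max.
    public static double DoubleInRange(Random rng, double min, double max)
    {
        return min + (max - min) * rng.NextDouble();
    }
}
```

For example, IntInRange(rng, 1, 7) simulates a six-sided die, since 7 itself is never returned.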

Here's an image with the first 65536 numbers generated by the Random class in C# from the seed 0. Each random number is represented as a pixel with a brightness between 0 (black) and 1 (white).

It's important to understand here that you cannot get the third random number without first getting the first and second one. This is not just an oversight in the implementation. In its very nature, an RNG generates each random number using the previous random number as part of the calculation. Hence we talk about a random sequence.

This means that RNGs are great if you need a bunch of random numbers one after the other, but if you need to be able to get a specific random number (say, the 26th random number from the sequence), then you're out of luck. Well, you could call Next() 26 times and use the last number but this is a very bad idea.

Why would I want a specific random number from the sequence?

If you generate everything at once, you probably don't need specific random numbers from a sequence, or at least I can't think of a reason. However, if you generate things bit by bit on the fly, then you do.

For example, say you have three sections in your world: A, B, and C. The player starts in section A, so section A is generated using 100 random numbers. Then the player proceeds to section B, which is generated using 100 different numbers. The generated section A is destroyed at the same time to free up memory. The player proceeds to section C, which is generated using yet another 100 numbers, and section B is destroyed.

However, if the player now goes back to section B again, it should be generated with the same 100 random numbers as it was the first time in order for the section to look the same.
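The problem can be sketched in a few lines. The code below walks through A, B, C and then B again while drawing from one shared System.Random sequence, and checks whether section B received the same numbers both times. The class and method names are hypothetical, and each section is reduced to nothing more than its 100 numbers.

```csharp
using System;
using System.Linq;

public static class SectionRevisitExample
{
    // Pull the next `count` numbers from a shared generator.
    public static int[] GenerateSection(Random rng, int count)
    {
        int[] numbers = new int[count];
        for (int i = 0; i < count; i++)
            numbers[i] = rng.Next();
        return numbers;
    }

    // Visit A -> B -> C -> B with a single shared sequence and report
    // whether section B got the same numbers on the second visit.
    public static bool RevisitMatches(int seed)
    {
        Random rng = new Random(seed);
        GenerateSection(rng, 100);                       // section A
        int[] firstVisitB = GenerateSection(rng, 100);   // section B
        GenerateSection(rng, 100);                       // section C
        int[] secondVisitB = GenerateSection(rng, 100);  // section B again
        return firstVisitB.SequenceEqual(secondVisitB);
    }
}
```

RevisitMatches should come back false: the second visit to B simply continues the sequence with numbers 301 to 400 instead of repeating numbers 101 to 200.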

Can't I just use random number generators with different seed values?

No! This is a very common misconception about RNGs. The fact is that while the different numbers in the same sequence are random in relation to each other, the same indexed numbers from different sequences are not random in relation to each other, even if it may look like it at first glance.

So if you have 100 sequences and take the first number from each, those numbers will not be random in relation to each other. And it won't be any better if you take the 10th, 100th, 1000th number from each sequence.

At this point some people will be skeptical, and that's fine. You can also look at this Stack Overflow question about RNG for procedural content if that's more trustworthy. But for something a bit more fun and informative, let's do some experiments and look at the results.

Let's look at the numbers generated from the same sequence for reference and compare with numbers created by getting the first number of each of 65536 sequences created from the seeds 0 to 65535.

Though the pattern is rather uniformly distributed, it isn't quite random. In fact, I've shown the output of a purely linear function for comparison, and it's apparent that using numbers from subsequent seeds is barely any better than just using a linear function.

Still, is it almost random though? Is it good enough?

At this point it can be a good idea to introduce better ways to measure randomness since the naked eye is not very reliable. Why not? Isn't it enough that the output looks random enough?

Well yes, in the end our goal is simply that things look sufficiently random. But the random number output can look very different depending on how it's used. Your generation algorithms may transform the random values in all kinds of ways that will reveal clear patterns that are hidden when just inspecting the values listed in a simple sequence.

An alternative way to inspect the random output is to create 2D coordinates from pairs of the random numbers and plot those coordinates into an image. The more coordinates land on the same pixel, the brighter that pixel gets.
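Here is a rough sketch of how such a plot can be built, together with the two data sets being compared. How the values are scaled to pixel coordinates is an assumption on my part for this example (a linear mapping onto the image size), and all names are hypothetical.

```csharp
using System;

public static class CoordinatePlotExample
{
    // The first `count` numbers from a single sequence.
    public static int[] FromOneSequence(int seed, int count)
    {
        Random rng = new Random(seed);
        int[] values = new int[count];
        for (int i = 0; i < count; i++)
            values[i] = rng.Next();
        return values;
    }

    // The first number from each of the sequences seeded 0 .. count - 1.
    public static int[] FirstOfEachSeed(int count)
    {
        int[] values = new int[count];
        for (int seed = 0; seed < count; seed++)
            values[seed] = new Random(seed).Next();
        return values;
    }

    // Turn consecutive pairs of values into (x, y) pixel coordinates and
    // count how many pairs land on each pixel of a size x size image.
    // Values are expected to be in [0, int.MaxValue), as Random.Next() returns.
    public static int[,] Plot(int[] values, int size)
    {
        int[,] counts = new int[size, size];
        for (int i = 0; i + 1 < values.Length; i += 2)
        {
            // Assumption: scale [0, int.MaxValue) linearly onto [0, size).
            int x = (int)((long)values[i] * size / int.MaxValue);
            int y = (int)((long)values[i + 1] * size / int.MaxValue);
            counts[x, y]++;
        }
        return counts;
    }
}
```

The returned counts can then be mapped to brightness, so pixels that are hit more often show up brighter.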

Let's take a look at such a coordinate plot for both random numbers in the same sequence and for random numbers created from individual sequences with different seeds. Oh and let's throw in the linear function too.

Perhaps surprisingly, when creating coordinates from random numbers from different seeds, the coordinates are all plotted into thin lines rather than being anything near uniformly distributed. This is again just like for a linear function.

Imagine you created coordinates from random numbers in order to plant trees on a terrain. Now all your trees would be planted in a straight line with the remaining terrain being empty!

We can conclude that random number generators are only useful if you don't need to access the numbers in a specific order. If you do, then you might want to look into random hash functions.

Random hash functions

In general a hash function is any function that can be used to map data of arbitrary size to data of fixed size, with slight differences in input data producing very big differences in output data.

For procedural generation, typical use cases are to provide one or more integer numbers as input and get a random number as output. For example, for large worlds where only parts are generated at a time, a typical need is to get a random number associated with an input vector (such as a location in the world), and this random number should always be the same given the same input. Unlike random number generators (RNGs) there is no sequence - you can get the random numbers in any order you like.

The code in C# could look like this - note that you can get the numbers in any order you like:

RandomHash randomHashObject = new RandomHash(12345);

int randomNumber2 = randomHashObject.GetHash(2);

int randomNumber3 = randomHashObject.GetHash(3);

int randomNumber1 = randomHashObject.GetHash(1);

The hash function may also take multiple inputs, which means you can get a random number for a given 2D or 3D coordinate:

RandomHash randomHashObject = new RandomHash(12345);

randomNumberGrid[20, 40] = randomHashObject.GetHash(20, 40);

randomNumberGrid[21, 40] = randomHashObject.GetHash(21, 40);

randomNumberGrid[20, 41] = randomHashObject.GetHash(20, 41);

randomNumberGrid[21, 41] = randomHashObject.GetHash(21, 41);

Procedural generation is not the typical use of hash functions, and not all hash functions are well suited for procedural generation, as they may either not have sufficiently random distribution, or be unnecessarily expensive.

One use of hash functions is as part of the implementation of data structures such as dictionaries. These are often fast but not random at all, since they are not meant for randomness but just for making algorithms perform efficiently.

Another use of hash functions is for cryptography. These are often very random, but are also slow, since the requirements for cryptographically strong hash functions are much higher than for values that just look random.

Our goal for procedural generation purposes is a random hash function that looks random but is also efficient, meaning that it's not slower than it needs to be. Chances are there's not a suitable one built into your programming language of choice, and that you'll need to find one to include in your project.
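To give an idea of what such a seeded hash object can look like, here is a minimal sketch with overloads for one and two integer inputs. The class name SketchHash and its layout are made up for this example. The mixing steps are modeled on the short-input path of 32-bit xxHash, but the output has not been checked bit for bit against a reference implementation, so treat it as an illustration rather than a verified port.

```csharp
using System;

public sealed class SketchHash
{
    private const uint Prime2 = 2246822519U;
    private const uint Prime3 = 3266489917U;
    private const uint Prime4 = 668265263U;
    private const uint Prime5 = 374761393U;

    private readonly uint seed;

    public SketchHash(int seed)
    {
        this.seed = unchecked((uint)seed);
    }

    // Hash of a single integer. The 4 is the input length in bytes.
    public uint GetHash(int x)
    {
        unchecked
        {
            uint h = seed + Prime5 + 4U;
            h = Round(h, (uint)x);
            return Avalanche(h);
        }
    }

    // Hash of a 2D coordinate. The 8 is the input length in bytes.
    public uint GetHash(int x, int y)
    {
        unchecked
        {
            uint h = seed + Prime5 + 8U;
            h = Round(h, (uint)x);
            h = Round(h, (uint)y);
            return Avalanche(h);
        }
    }

    // Mix one 32-bit word into the running hash value.
    private static uint Round(uint h, uint value)
    {
        unchecked
        {
            h += value * Prime3;
            h = (h << 17) | (h >> 15); // rotate left by 17 bits
            return h * Prime4;
        }
    }

    // Final bit mixing so that every input bit affects the whole output.
    private static uint Avalanche(uint h)
    {
        unchecked
        {
            h ^= h >> 15;
            h *= Prime2;
            h ^= h >> 13;
            h *= Prime3;
            h ^= h >> 16;
            return h;
        }
    }
}
```

Because there is no internal state that advances between calls, GetHash(2) gives the same value whether or not GetHash(1) was called first.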

I've tested a few different hash functions based on recommendations and information from various corners of the Internet. I've selected three of those for comparison here.

- PcgHash: I got this hash function from Adam Smith in a discussion on the Google Groups forum on Procedural Content Generation. Adam proposed that with a little skill, it's not too hard to create your own random hash function and offered his PcgHash code snippet as an example.

- MD5: This may be one of the most well-known hash functions. It's also of cryptographic strength and more expensive than it needs to be for our purposes. On top of that, we typically just need a 32-bit int as return value, while MD5 returns a much larger hash value, most of which we'd just be throwing away. Nevertheless it's worth including for comparison.

- xxHash: This is a high-performing modern non-cryptographic hash function that has both very nice random properties and great performance.

Apart from generating the noise sequence images and coordinate plots, I've also tested with a randomness testing suite called ENT - A Pseudorandom Number Sequence Test Program. I've included select ENT stats in the images as well as a stat I devised myself which I call the Diagonals Deviation. The latter looks at sums of diagonal lines of pixels from the coordinate plot and measures the standard deviation of these sums.
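The sketch below shows one possible reading of the Diagonals Deviation. It is an assumption-laden illustration, not the exact code from the test framework: it assumes a square grid of hit counts, treats diagonals as wrapping around the edges so that every diagonal has the same number of pixels, and applies no normalization.

```csharp
using System;

public static class DiagonalsDeviationExample
{
    // counts is a square grid of hit counts, such as a coordinate plot.
    public static double DiagonalsDeviation(int[,] counts)
    {
        int n = counts.GetLength(0);
        if (n == 0 || counts.GetLength(1) != n)
            throw new ArgumentException("Expected a non-empty square grid.");

        // Sum each wrap-around diagonal: the cells (i, (i + d) mod n).
        double[] sums = new double[n];
        for (int d = 0; d < n; d++)
            for (int i = 0; i < n; i++)
                sums[d] += counts[i, (i + d) % n];

        double mean = 0;
        foreach (double s in sums) mean += s;
        mean /= n;

        double variance = 0;
        foreach (double s in sums) variance += (s - mean) * (s - mean);
        variance /= n;

        // Population standard deviation of the diagonal sums.
        return Math.Sqrt(variance);
    }
}
```

With this reading, an evenly filled plot gives a deviation of zero, while a plot where all points collapse onto one diagonal line gives a large value.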

Here are the results from the three hash functions:

PcgHash stands out in that while it appears very random in the noise images of sequential random values, the coordinate plot reveals clear patterns, which means it doesn't hold up well to simple transformations. I conclude from this that rolling your own random hash function is hard and should probably be left to the experts.

MD5 and xxHash seem to have very comparable random properties, and out of those, xxHash is around 50 times faster.

xxHash also has the advantage that although it's not an RNG, it still has the concept of a seed, which is not the case for all hash functions. Being able to specify a seed has clear advantages for procedural generation, since you can use different seeds for different random properties of entities, grid cells, or similar, and then just use the entity index / cell coordinate as input for the hash function as-is. Crucially, with xxHash, the numbers from differently seeded sequences are random in relation to each other (see Appendix B for more details).

Hash implementations optimized for procedural generation

In my investigations of hash functions it has become clear that while it's good to choose a hash function that's high-performing in general-purpose hash benchmarks, it's crucial for performance to optimize it to procedural generation needs rather than just using the hash function as-is.

There are two important optimizations:

- Avoid conversions between integers and bytes. Most general-purpose hash functions take a byte array as input and return an integer or some bytes as the hash value. However, some of the high-performing ones convert the input bytes to integers since they operate on integers internally. Since it's most common for procedural generation to get a hash based on integer input values, the conversion to bytes is completely pointless. Refactoring the reliance on bytes away can triple the performance while leaving the output 100% identical.

- Implement no-loop methods that take just one or a few inputs. Most general-purpose hash functions take input data of variable length in the form of an array. This is useful for procedural generation too, but the most common uses are probably to get a hash based on just 1, 2 or 3 input integers. Creating optimized methods that take a fixed number of integers rather than an array can eliminate the need for a loop in the hash function, and this can dramatically improve the performance (by around 4x-5x in my tests). I'm not an expert on low level optimization, but the dramatic difference could be caused by either implicit branching in the for loop or by the need to allocate an array.
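Both optimizations can be illustrated with a small sketch that puts the two shapes side by side: a general-purpose method that takes a byte array and loops over it, and a specialized method that takes a single integer directly. The names are hypothetical, the byte version only covers short inputs made of whole 4-byte words, and the mixing steps are modeled on the short-input path of 32-bit xxHash without being a verified port.

```csharp
using System;

public static class HashOptimizationExample
{
    private const uint Prime2 = 2246822519U;
    private const uint Prime3 = 3266489917U;
    private const uint Prime4 = 668265263U;
    private const uint Prime5 = 374761393U;

    // General-purpose shape: byte array in, loop over 4-byte words.
    public static uint HashBytes(byte[] data, uint seed)
    {
        if (data.Length % 4 != 0 || data.Length >= 16)
            throw new ArgumentException("Sketch only covers 0, 4, 8 or 12 bytes.");
        unchecked
        {
            uint h = seed + Prime5 + (uint)data.Length;
            for (int i = 0; i < data.Length; i += 4)
            {
                // Bytes are converted back to an integer before mixing.
                uint word = BitConverter.ToUInt32(data, i);
                h += word * Prime3;
                h = ((h << 17) | (h >> 15)) * Prime4;
            }
            return Avalanche(h);
        }
    }

    // Specialized shape: one integer in, no array and no loop.
    public static uint HashInt(int value, uint seed)
    {
        unchecked
        {
            uint h = seed + Prime5 + 4U;
            h += (uint)value * Prime3;
            h = ((h << 17) | (h >> 15)) * Prime4;
            return Avalanche(h);
        }
    }

    // Final bit mixing shared by both versions.
    private static uint Avalanche(uint h)
    {
        unchecked
        {
            h ^= h >> 15;
            h *= Prime2;
            h ^= h >> 13;
            h *= Prime3;
            h ^= h >> 16;
            return h;
        }
    }
}
```

HashInt(value, seed) returns the same result as HashBytes(BitConverter.GetBytes(value), seed), it just skips the round trip through a byte array and the loop.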

My current recommendation for a hash function is to use an implementation of xxHash that's optimized for procedural generation. See Appendix C for details on why.

You can get my implementations of xxHash and other hash functions on sourcehut. They are written in C# but shouldn't be too hard to port to other languages.

Besides the optimizations I also added extra methods to get the output as an integer number in a specified range or as a floating point number in a specified range, which are typical needs in procedural generation.
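As a sketch of what such range helpers can look like, the methods below map a 32-bit hash value to an integer or floating point range. These are hypothetical helpers written for this example, and the methods in the actual implementation may work differently.

```csharp
using System;

public static class HashRangeExample
{
    // Map a 32-bit hash to an integer in [min, max). Requires min < max.
    // Scales with a 64-bit multiply and shift rather than a modulo.
    public static int ToRange(uint hash, int min, int max)
    {
        if (min >= max)
            throw new ArgumentException("min must be less than max.");
        ulong span = (ulong)((long)max - min);
        long offset = (long)((hash * span) >> 32); // always in [0, span)
        return (int)(min + offset);
    }

    // Map a 32-bit hash to a double in [0, 1).
    public static double ToUnit(uint hash)
    {
        return hash / 4294967296.0; // divide by 2^32
    }

    // Map a 32-bit hash onto the span min..max as a double.
    public static double ToRange(uint hash, double min, double max)
    {
        return min + (max - min) * ToUnit(hash);
    }
}
```

For example, ToRange(hash, 5, 10) returns 5 for the lowest possible hash value and 9 for the highest.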

Note: At the time of writing I only added a single integer input optimization to xxHash and MurmurHash3. I'll add optimized overloads for two and three integer inputs too when I get time.

Combining hash functions and RNGs

Random hash functions and random number generators can also be combined. A sensible approach is to use random number generators with different seeds, but where the seeds have been passed through a random hash function rather than being used directly.

Imagine you have a large maze world, possibly nearly infinite. The world has a large scale grid where each grid cell is a maze. As the player moves around in the world, mazes are generated in the grid cells surrounding the player.

In this case you'll want each maze to always be generated the same way every time it's visited, so the random numbers needed for that need to be able to be produced independently from previously generated numbers.

However, mazes are always generated one whole maze at a time, so there's no need to have control over the order of the individual random numbers used for one maze.

The ideal approach here is to use a random hash function to create a seed for a maze based on the coordinate of the grid cell of the maze, and then use this seed for a random number generator sequence of random numbers.

The C# code could look like this:

RandomHash randomHashObject = new RandomHash(12345);

int mazeSeed = randomHashObject.GetHash(cellCoord.x, cellCoord.y);

Random randomSequence = new Random(mazeSeed);

int randomNumber1 = randomSequence.Next();

int randomNumber2 = randomSequence.Next();

int randomNumber3 = randomSequence.Next();

Conclusions

If you need control over the order of querying random numbers, use a suitable random hash function (such as xxHash) in an implementation that's optimized for procedural generation.

If you just need to get a bunch of random numbers and the order doesn't matter, the simplest way is to use a random number generator such as the System.Random class in C#. In order for all the numbers to be random in relation to each other, either only a single sequence (initialized with one seed) should be used, or if multiple seeds are used they should be passed through a random hash function (such as xxHash) first.

The source code for the random numbers testing framework referred to in this article, as well as a variety of RNGs and hash functions, is available on sourcehut.

Appendix A: A note on continuous noise

For certain things you'll want to be able to query noise values that are continuous, meaning that input values near each other produce output values that are also near each other. Typical uses are for terrains or textures.

These requirements are completely different from the ones discussed in this article. For continuous noise, look into Perlin Noise or, better, Simplex Noise.

However, be aware that these are only suitable for continuous noise. Querying continuous noise functions just to get random numbers unrelated to other random numbers will produce poor results since it's not what these algorithms are optimized for. For example, I've found that querying a Simplex Noise function at integer positions will return 0 for every third input!

Additionally, continuous noise functions usually use floating point numbers in their calculations, which have worse stability and precision the further you get from the origin.
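The precision loss is easy to demonstrate with 32-bit floats. The small helper below (a hypothetical name, just for illustration) checks whether adding a small offset to a position still changes the stored value.

```csharp
using System;

public static class FloatPrecisionExample
{
    // Returns true if adding `offset` to `position` changes the stored float.
    public static bool OffsetSurvives(float position, float offset)
    {
        // The cast forces the sum to be rounded to 32-bit precision.
        float moved = (float)(position + offset);
        return moved != position;
    }
}
```

Near the origin, OffsetSurvives(1f, 0.01f) is true. A million units out, OffsetSurvives(1000000f, 0.01f) is false, because neighboring float values are 0.0625 apart at that magnitude and the offset is rounded away.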

Appendix B: More test results for seed and input values

I've heard various misconceptions over the years and I'll try to address a few more of them here.

Isn't it best to use a large number for the seed?

No, I haven't seen anything that indicates that. If you look at the test images throughout this article, there's no difference between the results for low or high seed values.

Don't random number generators take a few numbers to "get going"?

No. Again, if you look at the test images, you can see that the sequences of random values follow the same pattern from start (upper left corner and proceeding one line after the other) to end.

In the image below I've tested the 0th number in 65536 sequences as well as the 100th number in those same sequences. As can be seen, there's no apparent significant difference in the (lack of) quality of the randomness.

Don't some RNGs, such as Java's, have better randomness between numbers from differently seeded sequences?

Maybe a tiny bit better, but not nearly enough. Unlike the Random class in C#, the Random class in Java doesn't use the provided seed as-is, but shuffles the bits a bit before storing the seed.

The resulting numbers from different sequences may be a tiny bit more random looking, and we can see from the test stats that the Serial Correlation is much better. However, it's clear in the coordinates plot that the numbers still collapse to a single line when used for coordinates.
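For reference, the seed handling in java.util.Random amounts to XOR-ing the seed with a fixed constant and keeping the lower 48 bits. Here it is rewritten as a small C# sketch, with a made-up class name:

```csharp
using System;

public static class JavaSeedScrambleExample
{
    private const long Multiplier = 0x5DEECE66DL;
    private const long Mask = (1L << 48) - 1;

    // What java.util.Random does to a seed before storing it.
    public static long Scramble(long seed)
    {
        return (seed ^ Multiplier) & Mask;
    }
}
```

Since XOR with a constant only flips a fixed set of bits, two seeds that differ in a single bit still differ in exactly that one bit after scrambling. That is consistent with the results above: the seeds look different, but they are no less related to each other than before.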

That said, there's no reason why an RNG couldn't apply a high-quality random hash function to the seed before using it. In fact it seems like a very good idea to do so, with no downsides I can think of. It's just that popular RNG implementations that I'm aware of don't do that, so you'll have to do it yourself as described previously.

How come it's fine to use different seeds for random hash functions when it isn't for RNGs?

There's no intrinsic reason, but hash functions such as xxHash and MurmurHash3 treat the seed value similarly to the inputs, meaning that they essentially apply a high-quality random hash function to the seed, so to speak. Because they're implemented that way, it's safe to use the Nth number from differently seeded random hash objects.

Appendix C: Comparison of more hash functions

In the original version of this article I compared PcgHash, MD5, and MurmurHash3 and recommended using MurmurHash3.

MurmurHash3 has excellent randomness properties and very decent speed. The author implemented it in parallel with a framework for testing hash functions called SMHasher which has become a widely used framework for that purpose.

I also looked at this Stack Overflow question about good hash functions for uniqueness and speed which compares a lot more hash functions and seems to paint an equally favorable picture of MurmurHash.

After publishing the article I got recommendations from Aras Pranckevičius to look into xxHash and from Nathan Reed to look into Wang Hash which he's written about here.

xxHash is a hash function which apparently beats MurmurHash on its own turf by scoring as high on quality in the SMHasher testing framework while having significantly better performance. Read more about xxHash on its Google Code page.

In my initial implementation of it, after I had removed byte conversions, it was slightly faster than MurmurHash3, though not nearly as much faster as shown in the SMHasher results.

I also implemented WangHash. The quality proved to be insufficient since it showed clear patterns in the coordinate plot, but it was over five times faster than xxHash. I tried implementing a "WangDoubleHash" where its result is fed to itself, and that had fine quality in my tests while still being over three times faster than xxHash.
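For illustration, here is a sketch of the two variants. The WangHash steps and constants are the commonly published form of Thomas Wang's 32-bit integer hash and should be checked against a trusted source before being relied on. WangDoubleHash is just the same function applied twice, as described above. The class name is hypothetical.

```csharp
using System;

public static class WangHashExample
{
    // Single-integer hash: a handful of shifts, XORs and multiplications.
    public static uint WangHash(uint value)
    {
        unchecked
        {
            value = (value ^ 61U) ^ (value >> 16);
            value *= 9U;
            value ^= value >> 4;
            value *= 0x27d4eb2dU;
            value ^= value >> 15;
            return value;
        }
    }

    // Feed the result of the hash back into itself once.
    public static uint WangDoubleHash(uint value)
    {
        return WangHash(WangHash(value));
    }
}
```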

However, since WangHash (and WangDoubleHash) takes only a single integer as input, it struck me that I should implement single input versions of xxHash and MurmurHash3 as well to see if that might improve performance. And it turned out to improve performance dramatically (around 4-5 times faster). So much in fact that xxHash was now faster than WangDoubleHash.

As for quality, my own test framework reveals fairly obvious flaws, but it is not nearly as sophisticated as the SMHasher test framework, so scoring high there can be assumed to be a better seal of quality for randomness properties than just looking fine in my own tests. In general I would say that passing the tests in my test framework may be sufficient for procedural generation purposes, but since xxHash (in its optimized version) is the fastest hash function passing my own tests anyway, it's a no-brainer to just use that.

You can get my implementations of xxHash and other hash functions on sourcehut. They are written in C# but shouldn't be too hard to port to other languages.