For a while, my focus for my Vive VR game Eye of the Temple has been not on expanding the gameplay but on improving what I've already got, to make it as presentable as possible.

That has meant:

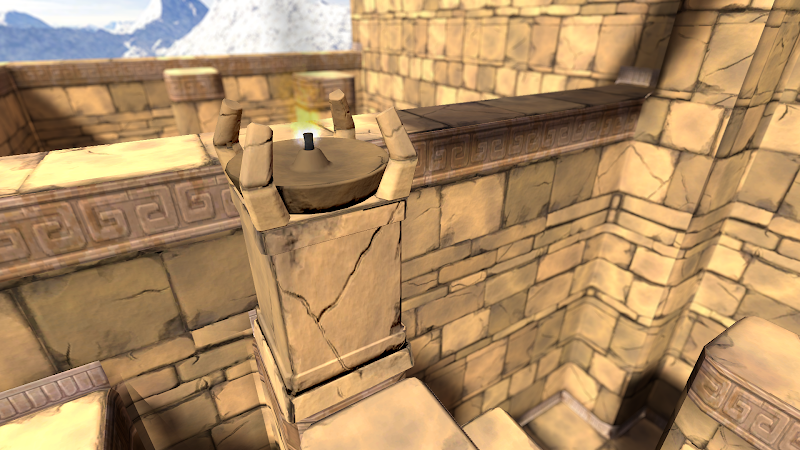

Stone torch model. You light these with your torch to trigger things happening:

Stone torch model. You light these with your torch to trigger things happening:

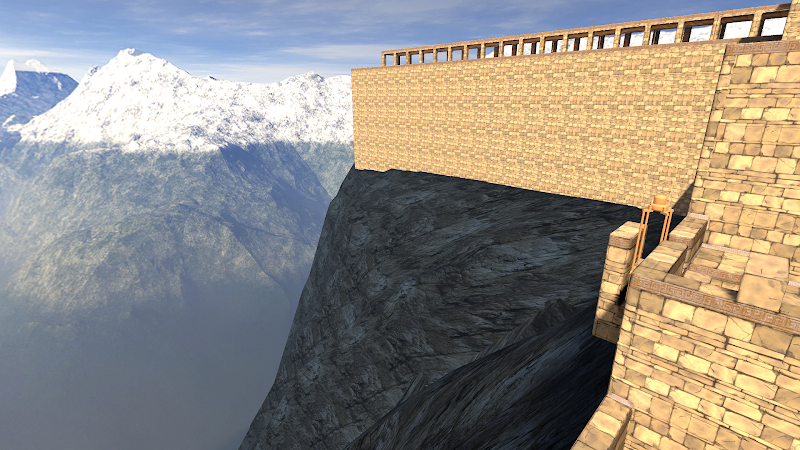

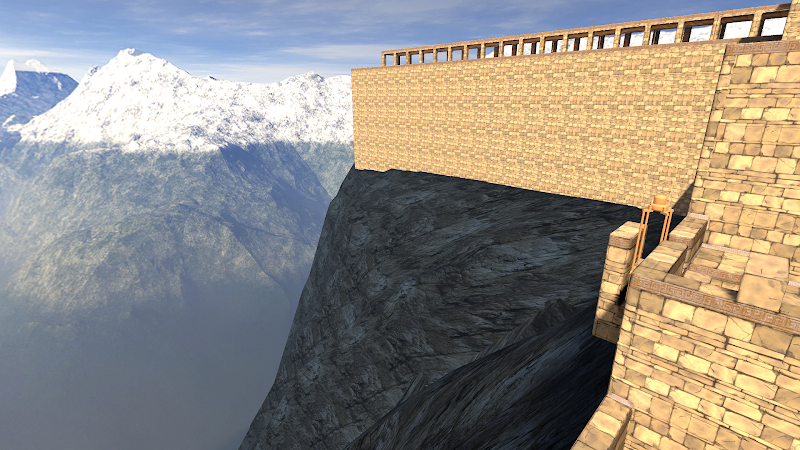

Cliffs model. The temple used to just float in the air; now it's grounded:

Cliffs model. The temple used to just float in the air; now it's grounded:

For a long time the game was full of placeholder models made of simple boxes and cylinders. There's still some of those left, but I've been working on replacing them all with proper models.

After briefly planning to work with contractors for 3D models, I decided to learn 3D modeling myself instead (and deal with the various challenges that come with it).

The models I need have highly specific requirements (they need to have very exact measurements and functionality to fit into the systems of the game) yet in the end they are quite simple models (man-made objects with no rigging).

With this combination it turned out that back-and-forth communication even with a very skilled artist took as much time as just doing the work myself. I'll still be working with artists for the game, just not for the simple 3d models I need.

Several of the models still have placeholder texturing. I have an idea for a good texture creation workflow for them, but it will take a little while to establish, so I'm postponing that while there's more pressing issues.

This has largely been a success. Gamers or not, I normally just let people play without instructions, and they figure things out. My dad completed the whole thing in one hour-long session when he was visiting.

The game did throw people in at the deep end though, asking them right from the start to step between moving platforms four meters above the ground. Some people would hesitate enough to end up mis-timing their step and stumble, making the experience even more extreme right from the beginning.

To ease people a bit more in, I've worked on an intro section that starts out with only a 0.75 meter drop, and the first two platforms have no timing requirement. I have yet to get wide testing of this to see if it helps.

There is one particular problem I've toiled with for a while, which is to design a platform that bridges two spots in a compact manner. Why this is tricky relates to how the game lets you explore a large virtual space using just a small physical space.

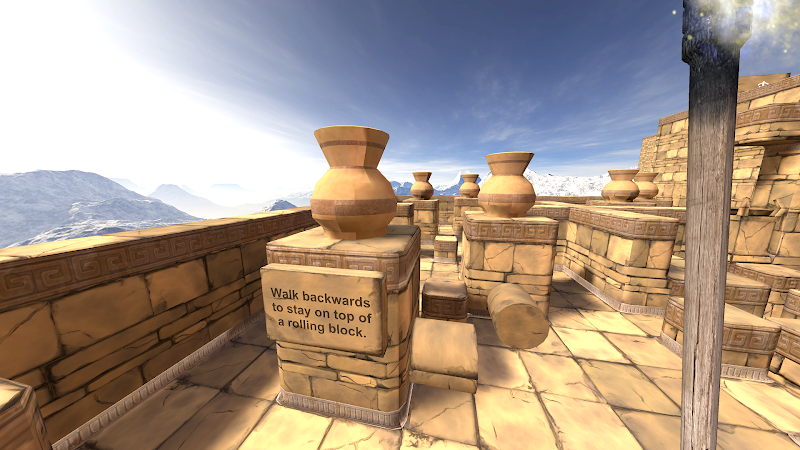

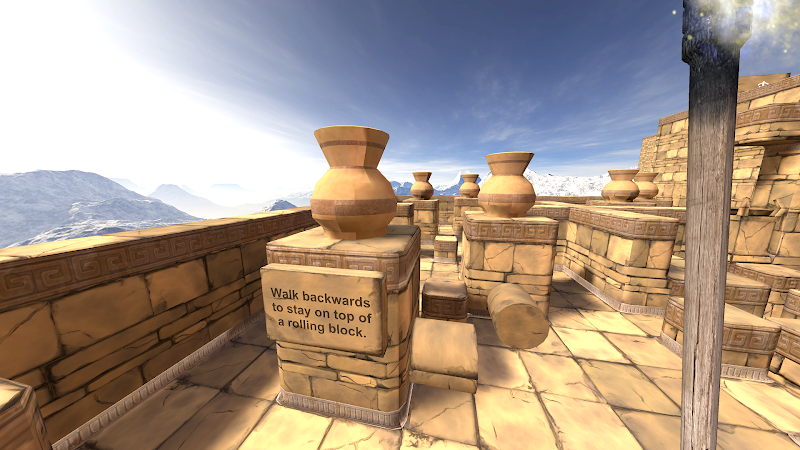

Originally I had platforms rotating around a center axis, but that made some people motion sick who otherwise didn't have problems with the rest of the game. I tried various contraptions to replace it, but they were complicated and awkward to use. My latest idea is using just a barrel-like rolling block, which is nice in its simplicity, and also a fun little gimmick to balance on once you understand how to use it.

I tried various contraptions to replace it, but they were complicated and awkward to use. My latest idea is using just a barrel-like rolling block, which is nice in its simplicity, and also a fun little gimmick to balance on once you understand how to use it.

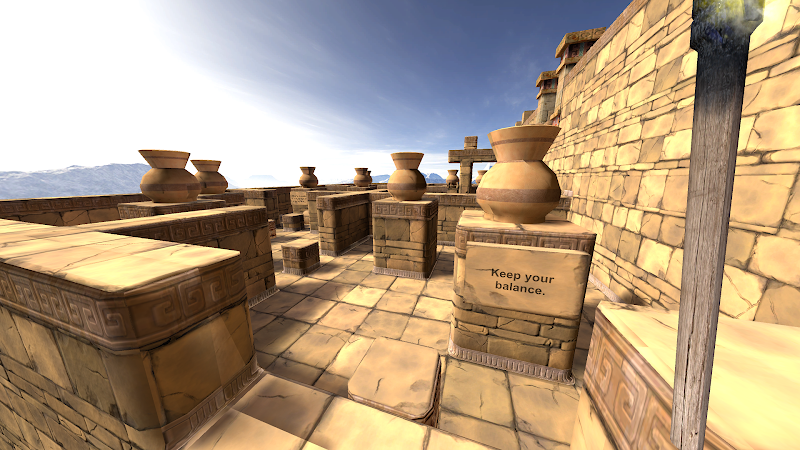

Figuring out what you're meant to do is easy to miss though, as I found out with the first tester trying it. I have some ideas for a subtle way to teach it, but that will take quite some time to implement. For now I settled for slapping a sign up that explains it.

In order to try to get faster feedback and shorter iteration cycles, I've now opened up for people to sign up online to be early testers of the game. If you have access to a Vive and would like to try out the game and provide detailed feedback based on your experience, please don't hesitate to join!

Sign up to provide feedback on early builds of Eye of the Temple

Read More »

That has meant:

- Improving visuals.

- Addressing usability issues found in play-testing.

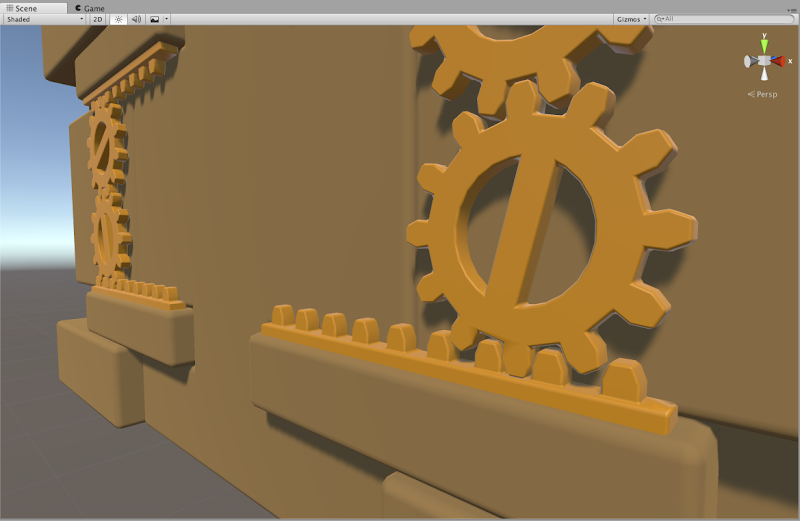

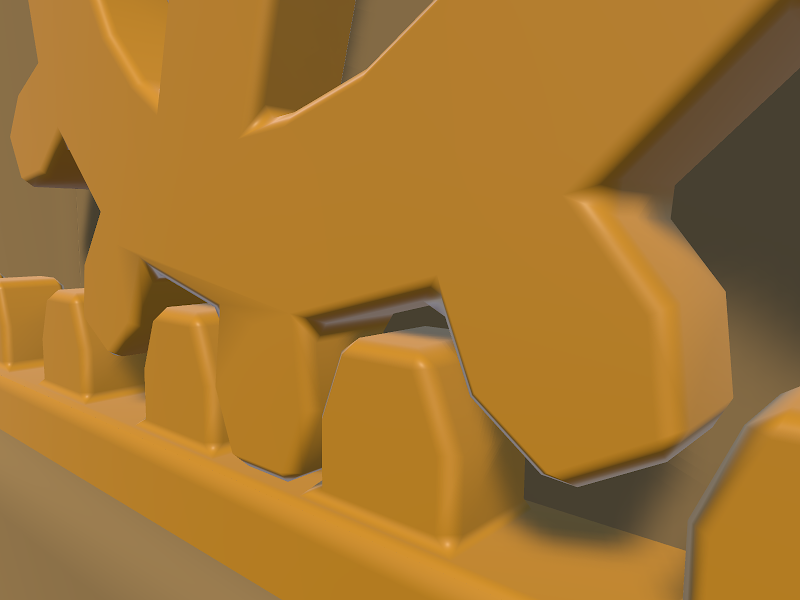

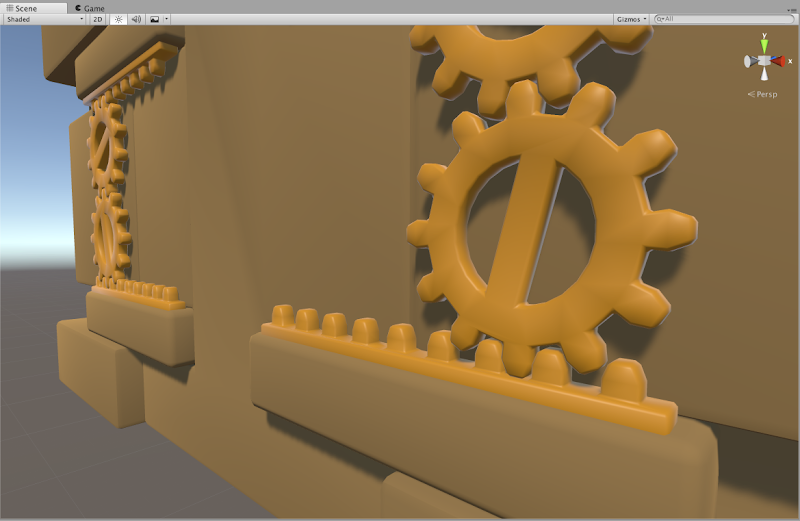

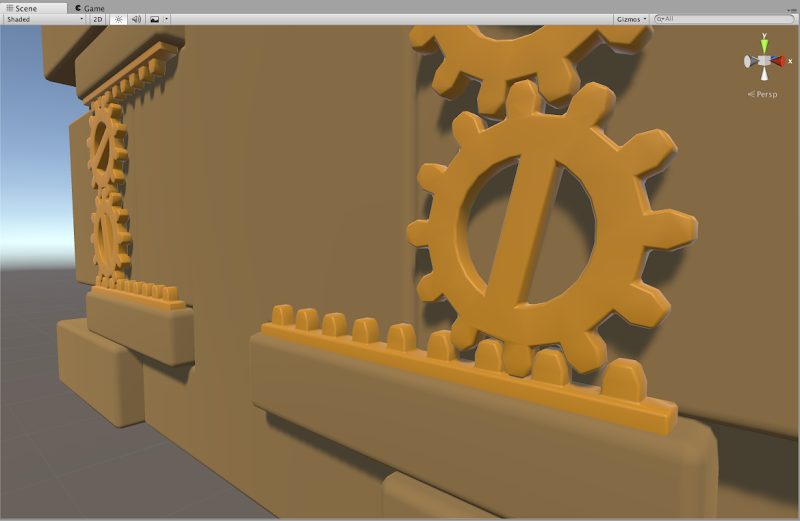

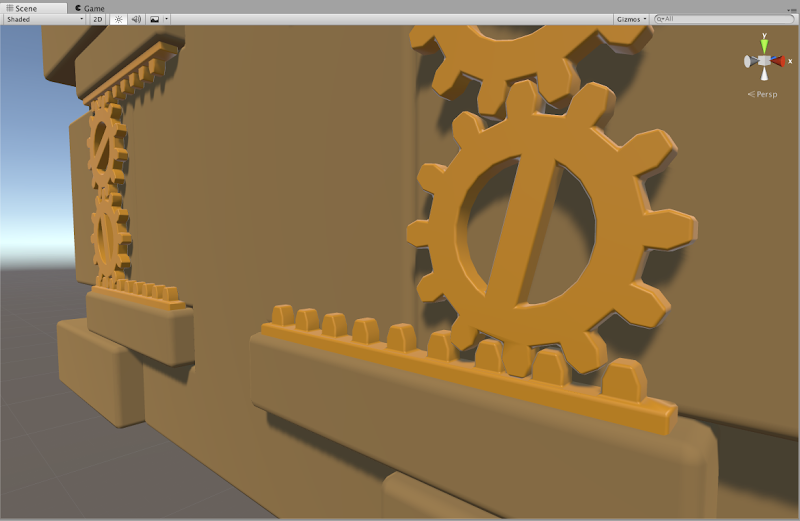

3D models

Gate model. Two keys must be inserted above the gate to unlock and open it:

Stone torch model. You light these with your torch to trigger events:

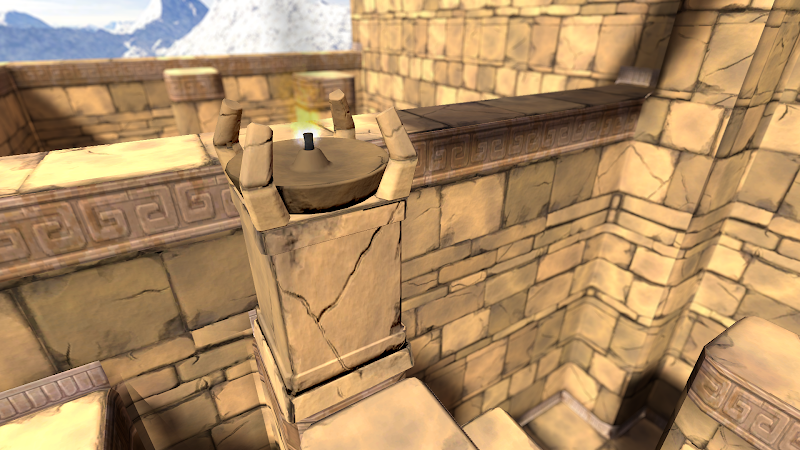

Cliffs model. The temple used to just float in the air; now it's grounded:

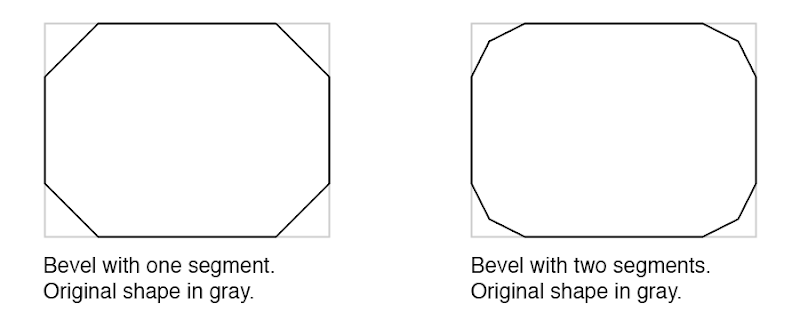

For a long time the game was full of placeholder models made of simple boxes and cylinders. There are still some of those left, but I've been working on replacing them all with proper models.

After briefly planning to work with contractors for 3D models, I decided to learn 3D modeling myself instead (and deal with the various challenges that come with it).

The models I need have highly specific requirements (they need to have very exact measurements and functionality to fit into the systems of the game) yet in the end they are quite simple models (man-made objects with no rigging).

With this combination, it turned out that back-and-forth communication, even with a very skilled artist, took as much time as just doing the work myself. I'll still be working with artists for the game, just not for the simple 3D models I need.

Several of the models still have placeholder texturing. I have an idea for a good texture creation workflow for them, but it will take a little while to establish, so I'm postponing that while there are more pressing issues.

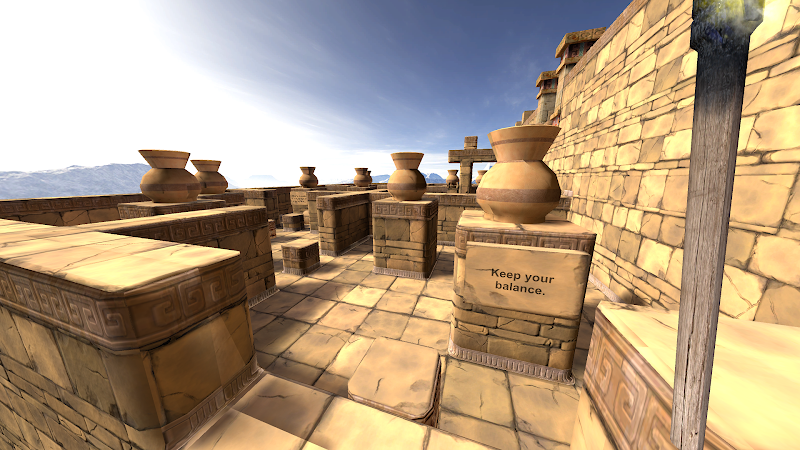

Intro section

My goal is that Eye of the Temple should be a rather accessible game. You need a body able to walk and crouch, and not be too afraid of heights, but I want it simple enough to play that people who don't normally play computer games can get into it without problems.

This has largely been a success. Gamers or not, I normally just let people play without instructions, and they figure things out. My dad completed the whole thing in one hour-long session when he was visiting.

The game did throw people in at the deep end though, asking them right from the start to step between moving platforms four meters above the ground. Some people would hesitate enough to end up mis-timing their step and stumble, making the experience even more extreme right from the beginning.

To ease people in a bit more, I've worked on an intro section that starts out with only a 0.75 meter drop, and where the first two platforms have no timing requirement. I have yet to test this widely to see if it helps.

There is one particular problem I've toiled with for a while, which is to design a platform that bridges two spots in a compact manner. Why this is tricky relates to how the game lets you explore a large virtual space using just a small physical space.

Originally I had platforms rotating around a center axis, but that made some people motion sick who otherwise didn't have problems with the rest of the game.

I tried various contraptions to replace it, but they were complicated and awkward to use. My latest idea is using just a barrel-like rolling block, which is nice in its simplicity, and also a fun little gimmick to balance on once you understand how to use it.

What you're meant to do is easy to miss though, as I found out with the first tester trying it. I have some ideas for a subtle way to teach it, but that will take quite some time to implement. For now I settled for slapping up a sign that explains it.

Early testers online forum

There is no substitute for directly observing people playing a game, but this is impractical for me to do frequently when I also have a full-time job. I'm lucky if I get to do it two times a month.

In order to try to get faster feedback and shorter iteration cycles, I've now opened up for people to sign up online to be early testers of the game. If you have access to a Vive and would like to try out the game and provide detailed feedback based on your experience, please don't hesitate to join!

Sign up to provide feedback on early builds of Eye of the Temple