February update: Gems

Here are the latest updates on the development of my Vive VR game Eye of the Temple.

New features:

- There are now gems throughout the temple that you can collect.

- Moving platforms have glowing symbols on them.

- Visuals: Intro area has some red stones and some of the dungeons have grittier gray stones and spikes.

- The way the platforms move has been tweaked, hopefully to further reduce potential for dizziness.

Notes on gems

The gems are found throughout the temple. The exact placement tries to take player proportions into account so that they are at a comfortable distance for reaching. I haven't tested this on different people yet though. If you could let me know how it works for you and how tall you are, that would be helpful. If you don't want to share that, that's ok too.

Right now the gems don't do anything yet. Later I will implement at the minimum a way for you to see how many you collected.

Beyond that I need to decide if the gems have a critical or non-critical function:

A critical function of the gems could be that they are used to unlock new areas in the game and thus are needed to progress. An almost-critical function would be to unlock alternative paths or secret rooms not otherwise accessible. This is still fairly critical, because when you're trying to see 100% of a game's content, it would be annoying to find out you can't because of some earlier mistake that it's too late to do anything about. Currently there are one-way platforms that you can take which will prevent you from going back to collect any gems you might have missed. If I make the gems critical, I'd have to find a way to make it possible to always go back to all areas of the temple.

Non-critical functions of the gems could be high-score, achievements, and, I dunno, unlockable hats if I get a selfie stick implemented for the game. :P Old games would typically grant you extra lives, but it doesn't work for modern games with infinite lives.

For now I refrained from placing gems at platforms that only go one way. If there were gems there and you failed to pick one up, you wouldn't have a second chance and I thought that might feel unfair or frustrating.

Early testers online forum

In order to try to get faster feedback and shorter iteration cycles, I opened up for people to sign up online to be early testers of the game. If you have access to a Vive (and 2.2 by 2.2 meters space) and would like to try out the game and provide detailed feedback based on your experience, please don't hesitate to join!

Sign up to provide feedback on early builds of Eye of the Temple

January update: Visuals, usability and early testing

For a while, my focus for my Vive VR game Eye of the Temple has been not to expand further on gameplay right now, but rather to improve what I've got in order to make it as presentable as possible.

That has meant:

- Improving visuals.

- Addressing usability issues found in play-testing.

(If anybody wonders what happened to the Whip Arena spin-off game, I put that on hold after it became clear it only worked well with a quite large physical VR space, which very few people have available.)

3D models

Gate model. Two keys must be inserted above the gate to unlock and open it:

Stone torch model. You light these with your torch to trigger things happening:

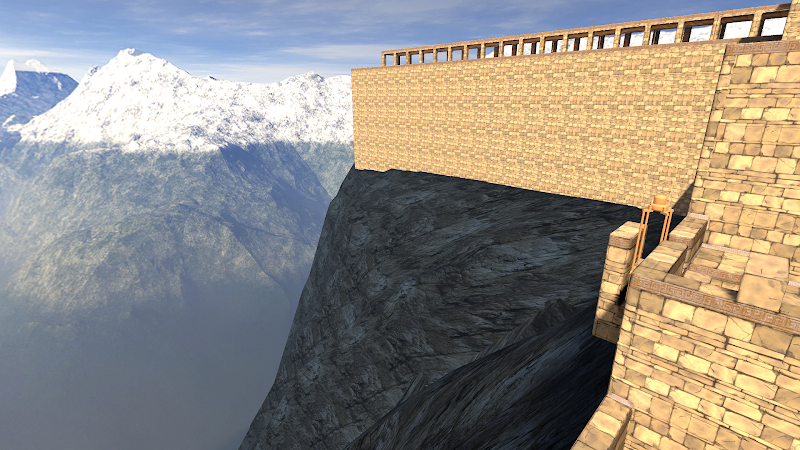

Cliffs model. The temple used to just float in the air; now it's grounded:

For a long time the game was full of placeholder models made of simple boxes and cylinders. There are still some of those left, but I've been working on replacing them all with proper models.

After briefly planning to work with contractors for 3D models, I decided to learn 3D modeling myself instead (and deal with the various challenges that come with it).

The models I need have highly specific requirements (they need to have very exact measurements and functionality to fit into the systems of the game) yet in the end they are quite simple models (man-made objects with no rigging).

With this combination it turned out that back-and-forth communication even with a very skilled artist took as much time as just doing the work myself. I'll still be working with artists for the game, just not for the simple 3d models I need.

Several of the models still have placeholder texturing. I have an idea for a good texture creation workflow for them, but it will take a little while to establish, so I'm postponing that while there are more pressing issues.

Intro section

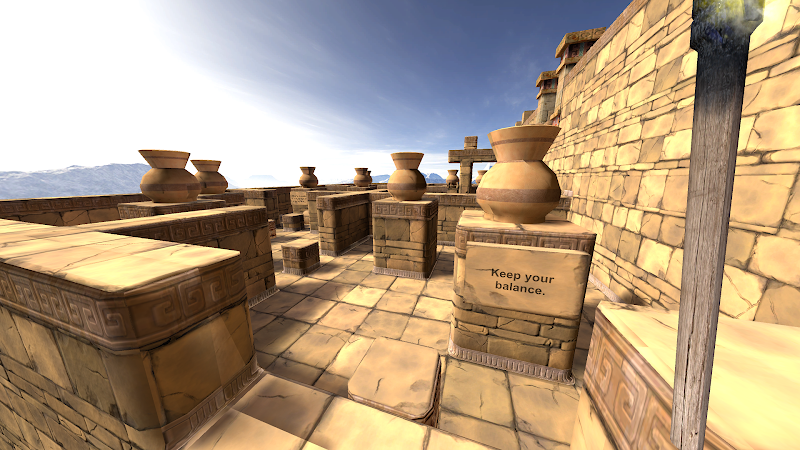

My goal is that Eye of the Temple should be a rather accessible game. You need a body able to walk and crouch, and not be too afraid of heights, but I want it simple enough to play that people who don't normally play computer games can get into it without problems.

This has largely been a success. Gamers or not, I normally just let people play without instructions, and they figure things out. My dad completed the whole thing in one hour-long session when he was visiting.

The game did throw people in at the deep end though, asking them right from the start to step between moving platforms four meters above the ground. Some people would hesitate enough to end up mis-timing their step and stumble, making the experience even more extreme right from the beginning.

To ease people in a bit more, I've worked on an intro section that starts out with only a 0.75 meter drop, and the first two platforms have no timing requirement. I have yet to get wide testing of this to see if it helps.

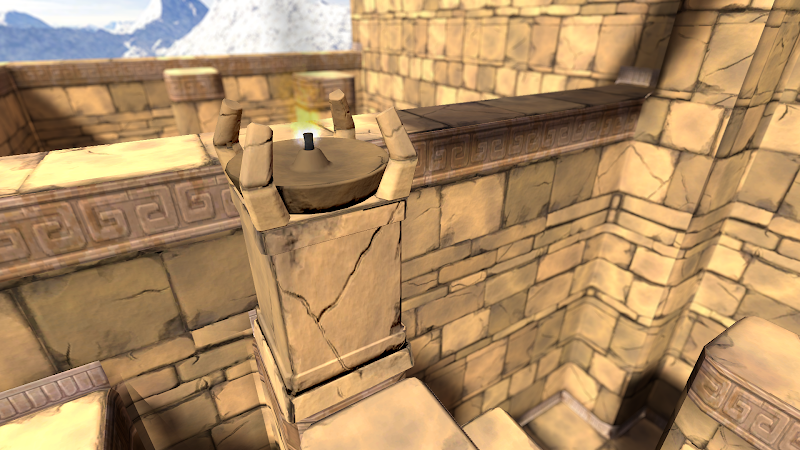

There is one particular problem I've toiled with for a while, which is to design a platform that bridges two spots in a compact manner. Why this is tricky relates to how the game lets you explore a large virtual space using just a small physical space.

Originally I had platforms rotating around a center axis, but that made some people motion sick who otherwise didn't have problems with the rest of the game.

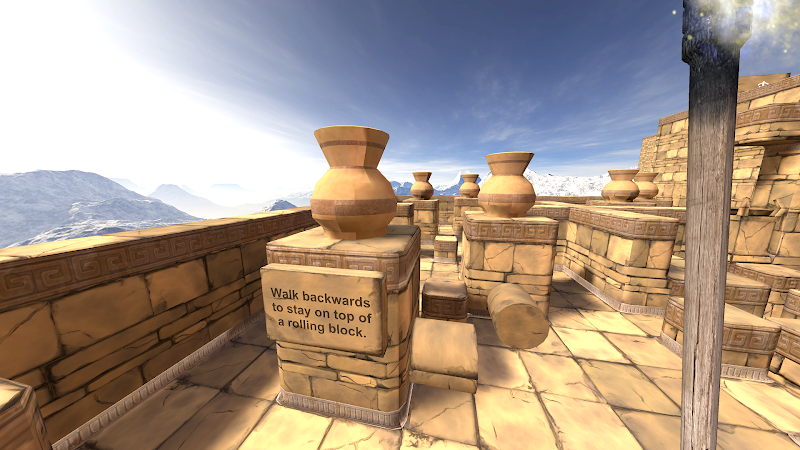

I tried various contraptions to replace it, but they were complicated and awkward to use. My latest idea is using just a barrel-like rolling block, which is nice in its simplicity, and also a fun little gimmick to balance on once you understand how to use it.

What you're meant to do is easy to miss though, as I found out with the first tester trying it. I have some ideas for a subtle way to teach it, but that will take quite some time to implement. For now I settled for slapping a sign up that explains it.

Early testers online forum

There is no substitute for directly observing people playing a game, but this is impractical for me to do frequently when I also have a full-time job. I'm lucky if I get to do it two times a month.

In order to try to get faster feedback and shorter iteration cycles, I've now opened up for people to sign up online to be early testers of the game. If you have access to a Vive and would like to try out the game and provide detailed feedback based on your experience, please don't hesitate to join!

Sign up to provide feedback on early builds of Eye of the Temple

The quest for automatic smooth edges for 3d models

I'm currently learning simple 3D modeling so I can make some models for my game. I'm using Blender for modeling.

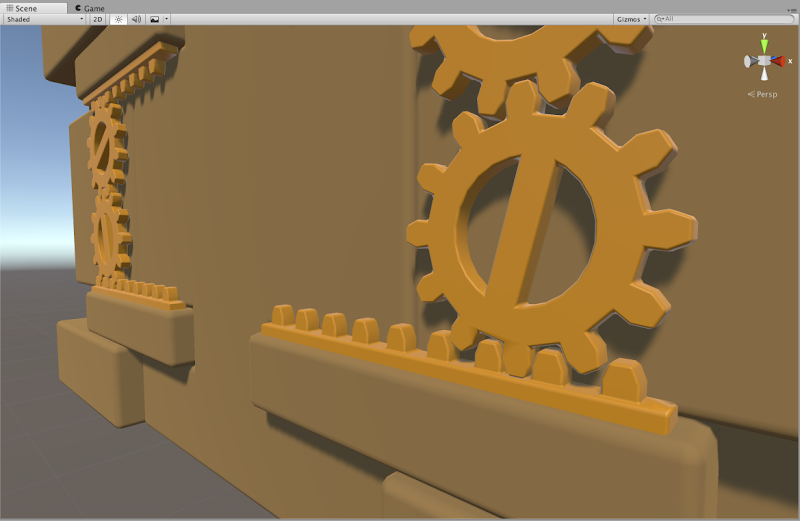

The models I need to make are fairly simple shapes depicting man-made objects made of stone and metal (though until I get them textured they will look more like plastic). There are a lot of flat surfaces.

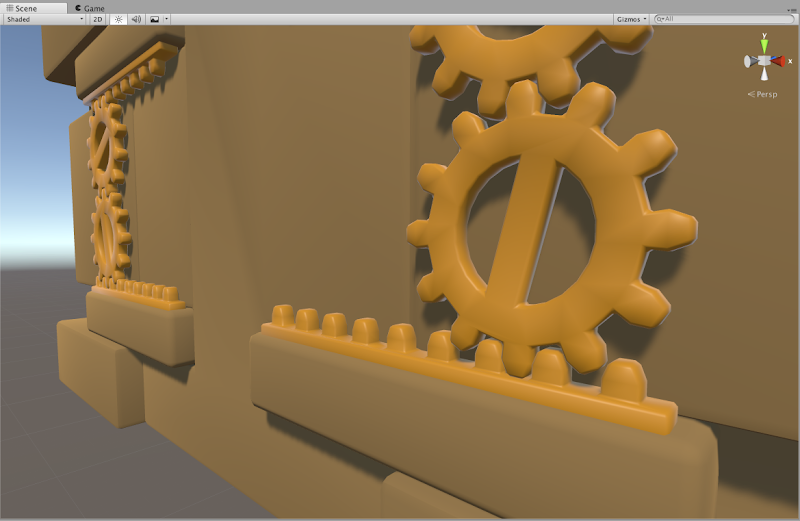

The end result I want is these simple shapes with flat surfaces - and smooth edges. In the real world, almost no objects have completely sharp edges, and so 3d models without smooth edges tend to look like they're made of paper, like this:

What I want instead is the same shapes but with smooth edges like this:

Here, some edges are very rounded, while others have just a little bit of smoothness in order to not look like paper. No edges here are actually completely sharp.

The two images above show the end result I wanted. It turns out it was much harder to get there than I had expected! Here's the journey of how I got there.

How are smooth edges normally obtained? By a variety of methods. The Blender documentation page on the subject is a bit confusing, talking about many different things without clear separation and with inconsistent use of images.

Edge loops plus subdivision surface modifier

From my research I have gathered that a typical approach is to add edge loops near edges that should be smooth, and then use a Subdivision Surface modifier on the object. This is also mentioned on the documentation page above. This has several problems.

First of all, subdivision creates a lot of polygons which is not great for game use.

Second, adding edge loops is a manual process, and I'm looking for a fully automatic solution. It's important for me to have quick iteration times: to be able to fundamentally change the shape and then shortly after see the updated end result inside the game. For this reason I strongly prefer a non-destructive editing workflow. This means that the parts that make up the model are kept as separate pieces and not "baked" into one model such that they can no longer be separated or manipulated individually.

Adding edge loops means adding a lot of complexity to the model just for the sake of getting smooth edges, which then makes the shape more cumbersome to make major changes to afterwards. Additionally, edge loops can't be added around edges resulting from procedures such as boolean subtraction (carving one object out of another) and similar, at least not without baking/applying the procedure, which is a destructive editing operation.

Edge loops and subdivision are not the way to go then.

Bevel modifier

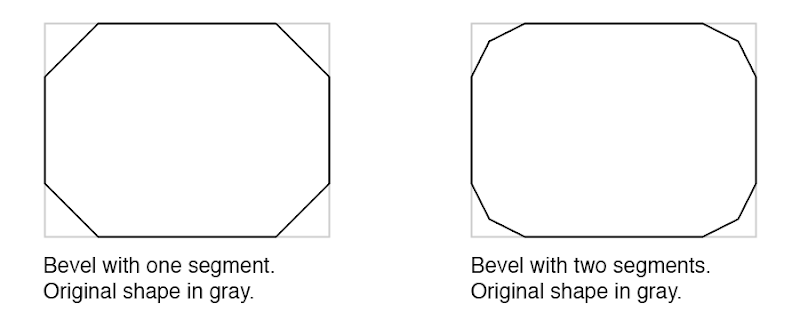

Some posts on the web suggest using a Bevel modifier on the object. This modifier can automatically add bevels of a specified thickness for all edges (or selectively if desired). The Bevel modifier in Blender does what I want in the sense that it's fully automatic and creates sensible geometry without superfluous polygons.

However, by itself the bevel either requires a lot of segments, which is not efficient for use in games (I'd want one to two segments only to keep the poly count low), or, when fewer segments are used, it creates a segmented look rather than smooth edges, as can be seen below.

Baking high-poly details into normal maps of low-poly object

Another common approach, especially for games, is to create both a high-poly and a low-poly version of the object. The high-poly one can have all the detail you want, so for example a bevel effect with tons of segments. The low-poly one is kept simple but has the appearance from the high-poly one baked into its normal maps.

This is of course a proven approach for game use, but it seems overly complicated to me for the simple things I want to achieve. Though I haven't tried it out in practice, I suspect it doesn't play well with a non-destructive workflow, and that it adds a lot of overhead and thus reduces iteration time.

Bevel and smooth shading

Going back to the bevel approach, what I really want is the geometry created by the Bevel modifier but with smooth shading. The problem is that smooth shading also makes the original flat surfaces appear curved.

Here is my model with bevel and smooth shading. The edges are smooth sure enough, but all the surfaces that were supposed to be flat are curvy too.

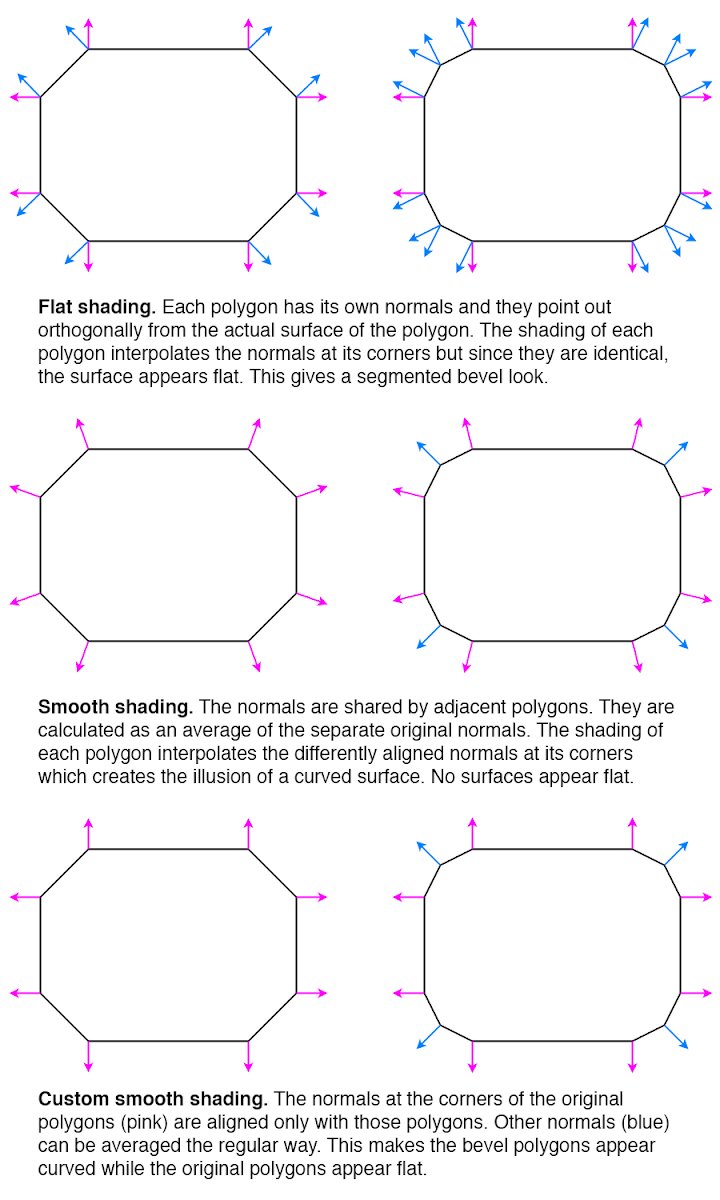

Smooth shading works by pretending the surface at each point is facing in a different direction than it actually does. For a given polygon, the faked direction is defined at each of its corners in the form of a normal. A normal is a vector that points out perpendicular to the surface. Only, we can modify normals to point in other directions for our faking purposes.

The way that smooth shading typically calculates normals makes all the surfaces appear curved. (There is typically a way to selectively make some surfaces flat, but then they will have sharp edges too.) The diagram below shows the normals for flat shading, for typical smooth shading, and for a third way that is what I would need for my smooth edges.

So how can the third way be achieved? I found a post that asks the same question essentially. The answers there don't really help. One incorrectly concludes that Blender's Auto Smooth feature gives the desired result - it actually doesn't but the lighting in the posted image is too poor to make it obvious. The other is the usual edge loop suggestion.

When I posted a question myself requesting clarification on the issue, I was pointed to a Blender add-on called Blend4Web. It has a Normal Editing feature with a Face button that seems to be able to align the normals in the desired way - however as a manual workflow, not an automated process. I also found other forum threads discussing the technique.

Using a better smoothing technique

At this point I got the impression there was no way to get the smooth edges I wanted in an automated way inside of Blender, at least without changing the source code or writing my own add-on. Instead I considered an alternative strategy: Since I ultimately use the models in Unity, maybe I could fix the issue there instead.

In Unity I have no way of knowing which polygons are part of bevels and which ones are part of the original surfaces. But it's possible to take advantage of the fact that bevel polygons are usually much smaller.

There is a common technique called face weighted normals / area weighted normals (explained here) for calculating averaged smooth normals which is to weigh the contributing normals according to the surface areas of the faces (polygons) they belong to. This means that the curvature will be distributed mostly on small polygons, while larger polygons will be more flat (but still slightly curved).
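To make the weighting concrete, here is a minimal sketch in Python (not the Unity C# from the actual Gist). It assumes a mesh given as a list of vertex positions and a list of index triples, and that vertices are already shared between neighboring faces; the function names are made up for illustration. It relies on the fact that the cross product of two triangle edges points along the face normal and has a length of twice the triangle's area, so summing the unnormalized cross products gives an area weighted average for free.

```python
import math
from collections import defaultdict

def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v) if length > 0 else v

def area_weighted_normals(vertices, triangles):
    """Return {vertex index: unit normal}, weighting each face by its area."""
    sums = defaultdict(lambda: (0.0, 0.0, 0.0))
    for i0, i1, i2 in triangles:
        # Unnormalized face normal: direction = face normal, length = 2 * area.
        n = cross(sub(vertices[i1], vertices[i0]), sub(vertices[i2], vertices[i0]))
        for i in (i0, i1, i2):
            s = sums[i]
            sums[i] = (s[0] + n[0], s[1] + n[1], s[2] + n[2])
    return {i: normalize(s) for i, s in sums.items()}

# A large face pointing up (+z) and a tiny face pointing sideways (+x),
# sharing vertex 0. The shared normal ends up almost straight up.
vertices = [(0, 0, 0), (10, 0, 0), (0, 10, 0), (0, 1, 0), (0, 0, 1)]
triangles = [(0, 1, 2), (0, 3, 4)]
normals = area_weighted_normals(vertices, triangles)
```

In this toy mesh the large face is 100 times bigger than the small one, so the normal at the shared vertex tilts only very slightly towards the small face. That is the "mostly flat but still slightly curved" behavior described above.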

From the discussions I've seen, there is general consensus that this usually produces better results than a simple average (here's one random thread about it). It sounds like Maya uses this technique by default since at least 2014, but smooth shading in Blender doesn't use it or support it (even though people have discussed it and made custom add-ons for it back in 2008), nor does the model importer in Unity (when it's set to recalculate normals).

Custom smoothing in Unity AssetPostprocessor

In Unity it's possible to write AssetPostprocessors that can modify imported objects as part of the import process. This can also be used for modifying an imported mesh. I figured I could use this to calculate the smooth normals in an alternative way that produces the results I want.

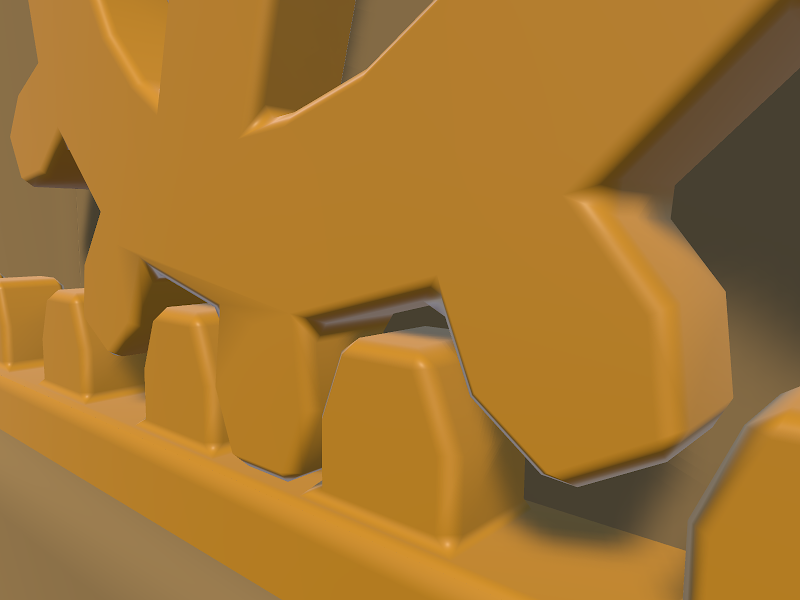

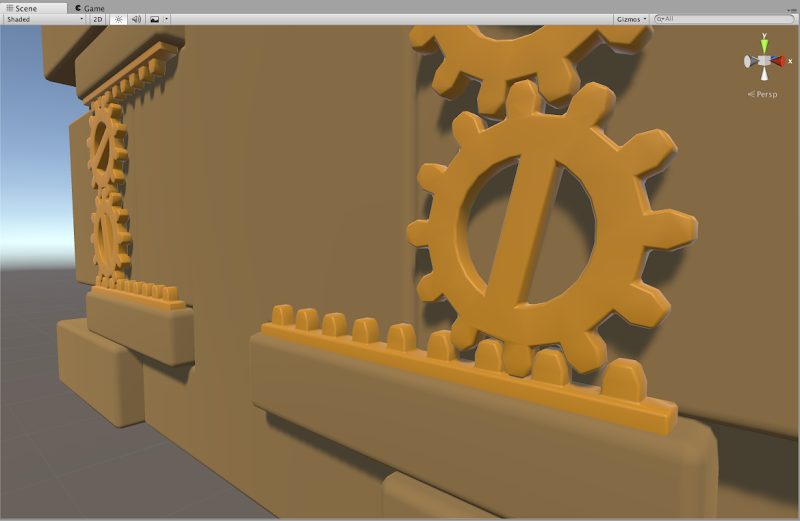

I started by implementing just area weighted normals. This technique still makes the large faces slightly curved. Here is the result.

Honestly, the slight curvature on the large faces can be hard to spot here. Still, I figured I could improve upon it.

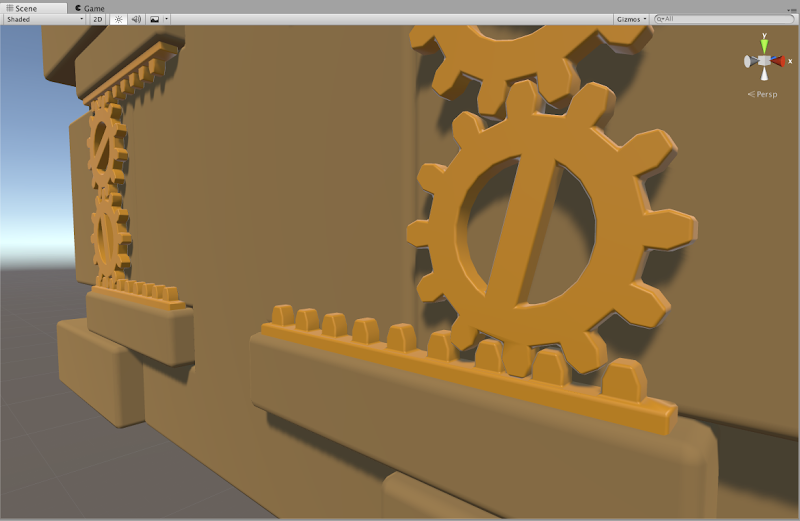

I also implemented a feature to let weights smaller than a certain threshold be ignored. For each averaged normal, all the contributing normals are collected in a set, and the largest weight is noted. Any weight smaller than a certain percentage of the largest weight can then be ignored and not included in the average. For my geometry, this worked very well and removed the remaining curvature from the large faces. Here is the final result again.
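The thresholding step can be sketched like this, again in Python as an illustration rather than the code from the Gist. It assumes the contributions for one averaged normal have already been collected as (unit normal, weight) pairs; the function name and the default threshold of 10% are hypothetical, since the right percentage depends on the geometry.

```python
import math

def thresholded_average(contributions, threshold=0.1):
    """Average the normals for one vertex, ignoring comparatively tiny weights.

    contributions: list of (unit_normal, weight) pairs, e.g. weight = face area.
    threshold: fraction of the largest weight below which a normal is ignored
               (0.1 is an arbitrary example value).
    """
    largest = max(weight for _, weight in contributions)
    total = [0.0, 0.0, 0.0]
    for normal, weight in contributions:
        if weight < threshold * largest:
            continue  # too small compared to the dominant face: leave it out
        for k in range(3):
            total[k] += normal[k] * weight
    length = math.sqrt(sum(c * c for c in total))
    if length == 0:
        return tuple(total)  # degenerate case: contributions cancel out
    return tuple(c / length for c in total)

# A big flat face (+z) next to a tiny bevel face (+x).
big_and_bevel = [((0.0, 0.0, 1.0), 50.0), ((1.0, 0.0, 0.0), 0.5)]
flat = thresholded_average(big_and_bevel)                  # bevel face ignored
tilted = thresholded_average(big_and_bevel, threshold=0.0) # plain area weighting
```

With the threshold in place, the normal on the big face comes out exactly perpendicular to it, while with a threshold of zero it still leans a little towards the bevel.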

The code is available here as a GitHub Gist. Part of the code is derived from code by Charis Marangos, aka Zoodinger.

Future perspectives

The technique of aligning smooth normals on beveled models with the original (pre-bevel) faces seems to be well understood when you dig a bit, but poorly supported in software. I hope Blender and other 3D software one day will have a "smooth" option for their Bevel modifier which retains the outer-most normal undisturbed.

A simpler prospect is adding support for area weighted normals. This produces almost as good results for smooth edges, and is a much more widely applicable technique, not specific to bevels or smooth edges at all. That Blender, Unity and other 3D software that support calculating smooth normals do not include this as an option is even more mind-boggling, particularly given how trivial it is to implement. Luckily there are workarounds for it in the form of AssetPostprocessors for Unity and custom add-ons for Blender.

If you do 3D modeling, how do you normally handle smooth edges? Are you happy with the workflows? Does any 3D software have great (automatic!) support for it out of the box?

Spin-off game: Whip Arena

I've spent the past few days at Exile (like a game jam but not a jam this year). I've envisioned having a whip in Eye of the Temple for a long time, and at Exile I began developing this whip mechanic.

In the main game, the whip is meant to be just one element in the gameplay, aiding in puzzles, like being able to grab and switch levers from a distance, and grabbing objects to pull towards you. However, for now I started out making a little self-contained game based just around the whip, so that I could focus on getting the feel right first.

At the end of Exile I had "whipped up" a little game I might call "Eye of the Temple - Whip Arena". Here's a video of the gameplay:

Implementing the whip physics was rather tricky. I've ended up with something that doesn't work quite like a real-world whip - you can't really make it do a crack in mid-air - but feels very responsive in its own way. The sound and haptics are based directly on the simulation, and I found it quite satisfying to use.

Now I'm wondering if I should take a little break from developing the full Eye of the Temple game and try to get this little arena game (which is much smaller in scope) finished and released first. I wonder if it's something people might be interested in? It definitely got positive reactions and feedback at Exile.

I'm thinking it would work well as an infinite game with high scores. There's not yet any fail condition though - I'm trying to think what might work well for that. I also need to implement some kind of bonuses and multipliers in the scoring probably.

As a side note - after spending several days prototyping and implementing this whip mechanic at Exile, I ended up with quite sore shoulders from whipping so much. ;)

If you have a Vive and would like to be an early tester of Whip Arena, let me know.

Announcing Eye of the Temple

I've been working on a VR game for the past months. It's called Eye of the Temple and it's a Vive game quite unlike any other.

Here's a pre-alpha trailer for it!

That's the first time I've made a trailer by the way. Quite challenging but also fun! It's got some placeholder models in it and it's made from playtest footage rather than clips made specifically for this trailer, but I tried to make the best of what I got.

Eye of the Temple is currently at an early stage in development.

Speaking of placeholder models, I'm just beginning now to look into working with contractors to have some nice art created for the game. If you know of any skilled concept artists or 3d modelers who'd like to design ancient contraptions for a game like this, let me know!

I previously worked on the game jam game Chrysalis Pyramid. Eye of the Temple is based on a similar core mechanic, but expands upon it in scope and variety, and is completely rewritten and redesigned from scratch. The goal is a commercial release in 2017.

Playtesting so far has been very promising and it's a lot of fun developing the game. Follow the development here and on Twitter, and let me know what you think!

Development of The Cluster put on hold

The Cluster is an exploration platformer game I've been developing in my spare time for some time. You can see all posts about it here. For the time being, I've put development of The Cluster on hold indefinitely.

Burdens

After having worked on the game for more than ten years (!) I finally came to the conclusion that the overall design and constraints in the game are making it very hard for me to finish it, while also holding me back from trying out a lot of otherwise interesting ideas.

- The game is procedural and the same every time you play, which makes it super important to guarantee you can't get stuck.

- There is gravity and no ability to fly, which means you will get stuck unless the algorithms are carefully designed to make it impossible. It has to be more or less mathematically provable.

- The AI enemies can follow you practically everywhere, which means every obstacle needs to be coded to also be usable by AI.

- The world is constructed from a rather large-scale grid which makes things like large ramps and hills impossible (just a bit boring).

- The third-person perspective requires art and animation resources for avatar and enemies.

- The side-scrolling format makes it hard to wordlessly attract the player to interesting things in the far distance, because only the immediate environment can be seen. This creates more of a reliance on maps and narratives to explain the reasoning for seeking out those far away things, and that plays against the procedural nature of the game.

Now, none of those things are impossible to overcome, and I did have a playable demo at the end of 2015 that worked under all of those constraints. But the game is not yet nearly varied enough, and every new feature is very tough to pull off under the burden of all those constraints.

Focusing on my strengths

I've switched focus to working on projects that I hope both play more to my strengths, and should be possible to pull off in a shorter time frame.

- First-person perspective without jumping, so no fiddly physics.

- No humanoid characters, which decreases the demands for art, animation and "behaviors".

- No ambition of a story (some of my favorite games don't have one anyway).

This frees me up to focus more exclusively on making interesting environments, which is what I'm most passionate about.

The good news is that the time has not been entirely wasted. For one, I've learned a LOT. And for another I've developed several pieces of substantive tech that are fully reusable in my future projects.

Speaking of future projects, I'm working on a few already.

One is The Big Forest, though I'm not actively working on it right now. The focus here is to create nice, interesting and varied environments with very simple gameplay on top. I want it to be my own favorite walking simulator. In its current early stage it's already one of the best virtual forest experiences I've had.

The project I'm actively working on is a VR game for Vive that I've tweeted a little bit about and will soon announce properly here on the blog.

Stay tuned!

The Big Forest

I've been continuing my work on the procedural terrain project I wrote about here. I added grass, trees and footstep sounds (crucial!) and it's beginning to really come together as a nice forest to spend some time in.

I made a video of it here. Enjoy!

If you want to learn more about it, have a look at this thread on the procedural generation subreddit.

Working with puzzle design through state space visualization

In the beginning of 2014 I was interested in procedurally generating computer game puzzles with typical elements like toggles, gates that can be triggered to open, boxes or boulders that can be moved onto pressure plates, etc. Many games contain elements like these and I took inspiration in particular from the game Lara Croft and the Guardian of Light.

To better understand these puzzles, and understand what makes a puzzle interesting or boring, I started creating a tool for analyzing and visualizing the state space of the puzzle. In the Procedural Content Generation mailing list I discussed the approach here.

I've worked on it on and off since, and while I still don't have an algorithm for procedurally generating the puzzles, the tool itself is interesting in its own right. It's called PuzzleGraph and I've just released it for free.

You can set up and connect puzzle elements like gates, toggles, pressure plates and boulders, and see the state space of the puzzle visualized, including solution paths, dead ends and fail states.
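For readers unfamiliar with the term, here is a tiny Python sketch of what exploring a puzzle's state space means. It is not how PuzzleGraph is implemented; the puzzle (three rooms, one toggle, one gate) and all names are made up for illustration. A state is the combination of where the player is and whether the toggle is on, and the state space is the graph of all states reachable from the start.

```python
from collections import deque

# Hypothetical puzzle: rooms A, B and C. A and B are freely connected.
# A toggle sits in room A. The gate between B and C is open while the toggle is on.
# A state is (room, toggle_on); reaching room C is the goal.

def moves(state):
    """Return the states reachable from `state` in a single action."""
    room, toggle_on = state
    result = []
    if room == "A":
        result.append(("B", toggle_on))      # walk from A to B
        result.append(("A", not toggle_on))  # flip the toggle
    elif room == "B":
        result.append(("A", toggle_on))      # walk back to A
        if toggle_on:
            result.append(("C", toggle_on))  # the gate is open
    return result                            # room C is the goal: no further moves

def explore(start):
    """Breadth-first search that collects every reachable state and its edges."""
    edges = {}
    queue = deque([start])
    while queue:
        state = queue.popleft()
        if state in edges:
            continue
        edges[state] = moves(state)
        queue.extend(edges[state])
    return edges

state_space = explore(("A", False))
for state, next_states in state_space.items():
    print(state, "->", next_states)
```

Even this trivial puzzle has five reachable states. A visualization of such a graph is what makes solution paths and dead ends visible at a glance.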

If you make some puzzles with PuzzleGraph, I'd love to see them!

The best demonstration is this video I made. If you already saw the video, skip down a bit for some new info and announcements.

Creating natural paths on terrains using pathfinding

Pathfinding is not just for finding paths for AI agents/NPCs and similar. It’s also for procedurally creating paths.

While working on path creation for a procedural terrain, I had the problem that the generated paths would keep having too steep sections, like this:

It was too steep and also didn’t look natural at all. I kept increasing the cost multiplier for slopes, but it didn’t help.

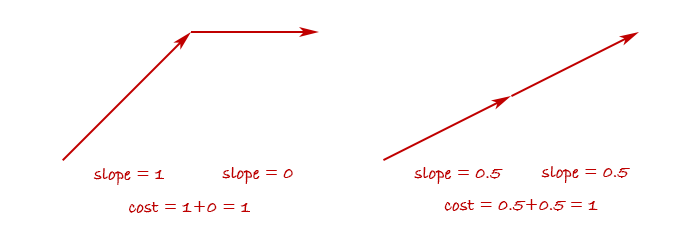

Then it hit me: My function just penalized the vertical distance, but it didn’t make any difference if this vertical distance came gradually or all at once.

So in fact, my cost function wasn’t trying to avoid steepness - rather it was trying to make a path where the altitude changes monotonically. It would do everything it could to avoid the path first going up and then down again.

But I didn’t care if the path went a little up and down, as long as it wasn’t too steep at any point. To achieve this behavior, I needed to penalize steep slopes more than linearly, for example by squaring it.

This has the effect of penalizing abrupt changes in altitude and rewarding gradual changes.
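The difference is easy to check with a bit of arithmetic. The Python sketch below compares two ways of gaining 10 units of altitude over ten path segments of length 5: gradually (1 unit per segment) or all at once (10 units in the first segment). The two cost functions are assumptions made for illustration, not the exact formulas from my code: one adds the vertical distance linearly, the other scales the segment length by one plus the squared slope.

```python
def linear_cost(horizontal, vertical, multiplier=1.0):
    """Penalize vertical distance linearly (the problematic version)."""
    return horizontal + abs(vertical) * multiplier

def squared_cost(horizontal, vertical, multiplier=1.0):
    """Penalize slope quadratically, so steep segments cost disproportionately more."""
    slope = abs(vertical) / horizontal
    return horizontal * (1.0 + multiplier * slope ** 2)

def path_cost(segments, cost_function):
    """Sum the cost over (horizontal, vertical) segments of a path."""
    return sum(cost_function(h, v) for h, v in segments)

gradual = [(5, 1)] * 10              # climb 1 unit on each of ten segments
abrupt = [(5, 10)] + [(5, 0)] * 9    # climb 10 units at once, then flat

print(path_cost(gradual, linear_cost), path_cost(abrupt, linear_cost))
print(path_cost(gradual, squared_cost), path_cost(abrupt, squared_cost))
```

With the linear cost both paths total 60, so the pathfinder has no reason to prefer the gentle one. With the squared cost the gradual path costs about 52 while the abrupt one costs 70.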

And sure enough, after the change the generated paths avoided steepness and looked much more like what humans would create.

Tweaking pathfinding cost functions is one of those odd little things that I really enjoy. Seeing a little change in a formula imbue a generated creation with what could be mistaken for human design is really fascinating and fun!

Further notes

The observation-turned-blog-post in its original form ended there, but I've compiled some further notes for those who want to dig into the details.

Pathfinding method

Let me describe the method I use for the pathfinding, since I was asked about this. I won't do an introduction to pathfinding in general here but assume understanding of the principles of performing pathfinding using an algorithm like A* or similar on a graph of nodes connected by edges.

Such a graph can be represented in many ways. Sometimes it's a data structure with explicit data representing all the nodes and edges and how they're connected. Other times it can just be a grid (like a 2D array) and each cell is implicitly connected to the neighboring cells - maybe the 4 or 8 neighbors, depending on whether diagonal connections are used.

It doesn't really matter, and a pathfinding algorithm can typically easily be modified to work with one or the other. Apart from giving it a start node and goal node, the important part is that you can tell the algorithm:

- Which other nodes is a node connected to?

- What is the real or estimated cost of getting from one node to another?

You might have data for this stored in advance or you might calculate the answers on the fly when asked.

For the terrain pathfinding I do the latter. In fact, there is neither an explicit graph, nor any 2D array. There is no data structure at all for the pathfinding to happen inside. Instead, it's all implicit, based solely on coordinates. I tell the pathfinder what the start and end coordinates are, and there's a function for getting "neighbor coordinates" to a given coordinate. There's also a function for getting the cost of getting from one coordinate to another, as you saw earlier.
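As an illustration of this implicit setup, here is a compact A* sketch in Python. It is not my actual implementation; the function names are hypothetical, the neighbor function uses only the plain 8 directions, and the cost is just the straight-line distance with no terrain involved. The point is that the search never touches a graph or array: it only calls a neighbor function and a cost function on coordinates.

```python
import heapq
import math

CELL_SIZE = 5  # coordinates are always integer multiples of this

def grid_neighbors(coord):
    """Compute neighbor coordinates on the fly (plain 8 directions for brevity)."""
    x, y = coord
    return [(x + dx * CELL_SIZE, y + dy * CELL_SIZE)
            for dx in (-1, 0, 1) for dy in (-1, 0, 1) if (dx, dy) != (0, 0)]

def distance(a, b):
    """Straight-line distance, used here both as edge cost and as heuristic."""
    return math.hypot(b[0] - a[0], b[1] - a[1])

def find_path(start, goal, neighbors, cost, heuristic):
    """A* on an implicit graph defined only by the given functions."""
    open_heap = [(heuristic(start, goal), 0.0, start)]
    came_from = {start: None}
    best_cost = {start: 0.0}
    while open_heap:
        _, cost_so_far, current = heapq.heappop(open_heap)
        if cost_so_far > best_cost[current]:
            continue  # stale heap entry; a cheaper route was found meanwhile
        if current == goal:
            path = []
            while current is not None:
                path.append(current)
                current = came_from[current]
            return path[::-1]
        for nxt in neighbors(current):
            new_cost = cost_so_far + cost(current, nxt)
            if new_cost < best_cost.get(nxt, math.inf):
                best_cost[nxt] = new_cost
                came_from[nxt] = current
                heapq.heappush(open_heap, (new_cost + heuristic(nxt, goal), new_cost, nxt))
    return None  # only reachable if the neighbor function yields a finite graph

path = find_path((0, 0), (15, 10), grid_neighbors, distance, distance)
print(path)
```

Swapping in a terrain-aware cost function changes the shape of the path without touching `find_path` at all. Note that the goal must lie on the same grid as the start, otherwise the search on an unbounded grid never terminates.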

This may sound completely free-form and magical at first, but there still is a structure that must be adhered to. You must imagine a grid structure and ensure the coordinates always fall within this grid. In my case it's a plain square cell grid where each cell has a size of 5. So the start coordinate, goal coordinate, and every provided neighbor coordinate must always have x and y values that are multiples of 5. You also shouldn't use floating-point numbers for this, since they can produce precision errors, and even the slightest imprecision can cause the pathfinding to completely fail.
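A small Python sketch of why integers matter here. The `snap_to_grid` helper is hypothetical (one way of getting arbitrary start and goal positions onto the grid), and the cell size of 5 is the one mentioned above. Pathfinding relies on recognizing that two coordinates are the same node, and that comparison is exact for integers but not for floats.

```python
CELL_SIZE = 5

def snap_to_grid(x, y, cell_size=CELL_SIZE):
    """Snap an arbitrary position to the nearest grid coordinate, as integers."""
    return (round(x / cell_size) * cell_size, round(y / cell_size) * cell_size)

start = snap_to_grid(12.4, -3.1)
print(start)  # a pair of ints that are multiples of CELL_SIZE

# Why floats are risky: two ways of computing "the same" coordinate can differ
# in the last bit, and then the node is not recognized as already visited.
visited = {(0.3, 0.0)}
print((0.1 + 0.2, 0.0) in visited)  # False, since 0.1 + 0.2 != 0.3 in floating point
```

With integer coordinates, reaching a cell by two different routes always produces the identical key, so lookups in the visited set behave as expected.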

I wanted my paths to be able to have a natural, non-jagged look, so I wanted edges to come in 16 directions instead of the basic 8. The 8 additional directions are achieved by going two cells out and one to the side. This provided me with an interesting choice of whether the 8 other directions should also go two cells out, or just one.

In theory the second option can be weird, since if you need to move just one cell the path has to first side-step by two cells. But in practice both seem to work fine. The first images in this post were made with the first option but I later decided to use the second to avoid the path having very small-scale zig-zags.
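The two variants of the 16-direction neighborhood can be written out as offset lists. This Python sketch uses a hypothetical `basic_reach` parameter to choose whether the 8 basic directions (straight and diagonal) go one or two cells out; the 8 additional directions always go two cells out and one to the side.

```python
def direction_offsets(basic_reach):
    """Return 16 (dx, dy) cell offsets.

    basic_reach: 1 or 2, how many cells the 8 basic directions go out.
    """
    basic = [(dx * basic_reach, dy * basic_reach)
             for dx in (-1, 0, 1) for dy in (-1, 0, 1) if (dx, dy) != (0, 0)]
    # Two cells out and one to the side, in all sign combinations.
    extra = [(sx * a, sy * b)
             for a, b in ((2, 1), (1, 2)) for sx in (-1, 1) for sy in (-1, 1)]
    return basic + extra

def neighbors(coord, cell_size=5, basic_reach=2):
    """Neighbor coordinates of `coord` on a grid with the given cell size."""
    x, y = coord
    return [(x + dx * cell_size, y + dy * cell_size)
            for dx, dy in direction_offsets(basic_reach)]

print(direction_offsets(1))
print(direction_offsets(2))
```

With `basic_reach=2` there is no offset that moves exactly one cell straight ahead, which is the side-stepping oddity described above: a single-cell move has to be composed of two longer edges, for example (1, 2) followed by (0, -2).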

Effects of different parameter values

I got asked on the proceduralgeneration sub-reddit:

How rapidly does the path change as you increase the power from 1.0 to 2.0? What happens if you go past 2.0? Does the path eventually have to spiral around the mountain or something?

I had actually been content just using the values I had found which seemed to work well enough, but now that I'd been asked, of course I wanted to find the answers too! I tried doing the pathfinding with the power values 1.5, 2.0 and 3.0 and with the multiplier values 1, 2, 3, 4, 5, 6, 7, 10, 15, 20, and 25. I had moved the multiplier inside the power function since the original version of the code, so those multipliers are multiplied onto the steepness before the resulting value is raised to the given power. Here's a table of the results.
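To show where the two parameters sit, here is a hedged Python sketch of a cost function with the multiplier inside the power. The overall shape (horizontal distance scaled by one plus the penalty) and the names are assumptions for illustration, not a copy of my code. As in my implementation, altitude is only sampled at the two end-points of the edge.

```python
import math

def edge_cost(a, b, height, multiplier=7.0, power=2.0):
    """Cost of going from coordinate a to coordinate b.

    height: function mapping a coordinate to terrain altitude.
    The multiplier is applied to the steepness before raising to the power.
    """
    horizontal = math.hypot(b[0] - a[0], b[1] - a[1])
    slope = abs(height(b) - height(a)) / horizontal  # end-points only
    return horizontal * (1.0 + (slope * multiplier) ** power)

def flat(coord):
    return 0.0

def ramp(coord):
    return 0.1 * coord[0]  # altitude rises 0.1 per unit along x

print(edge_cost((0, 0), (5, 0), flat))                  # 5.0: no penalty on flat ground
print(edge_cost((0, 0), (5, 0), ramp, multiplier=2.0))  # slope 0.1 -> penalty (0.2)^2
```

Because the multiplier is inside the power, a multiplier m at power p scales the penalty by m^p, so the same multiplier value has a much stronger effect at power 3.0 than at power 1.5.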

Some notes on the result.

Overall the common theme is that the results range from straight and boring to wildly tortuous. At the extreme end, the path begins to create loops - something that should be impossible with path-finding. However, the edges I use in the path-finding can cross each other. This means that they can appear to loop even though they never pass through the same points from the perspective of the path-finding. They only begin to do this once the path-finding is so extremely sensitive to tiny changes in altitude that the small difference in altitude between one of two crossing edges is enough for it to exploit it to loop around. I should say that I only sample the altitude at the end-points of each edge, which appears to be fully sufficient except in the extreme cases like this.

Note that there are very similar occurrences across the different power values. For example, multiplier 10 at power 1.5 looks just like multiplier 6 at power 3.0, and multiplier 7 at power 2.0 looks just like multiplier 5 at power 3.0. Does this mean that any result you can get with one power value, you can also get with another? That there exists a transformation that means they're mathematically equivalent? No, I don't believe so. It feels like there are subtle differences. For example, the progression of paths with power value 3 begins to do loops before it begins to take a major detour, while for power value 2, the loops only start happening after it has begun doing a large detour. The differences are very subtle though and hard to put a finger on exactly.

One thing that's tempting to look for is qualitative differences, such as some paths doing many small zig-zags and others doing larger swoops. However, I think that's a red herring. The algorithm doesn't measure sharpness of turns or frequency of turns in any way and shouldn't be able to tell one from the other. I think that the seeming qualitative differences are thus up to unpredictable aspects of how the path-finding interacts with the terrain height function that sometimes just happen to produce one result or the other. To answer it in terms of the original question: If the terrain was perfectly conical, a path from the base to the top might equally well form a spiral, a zig-zag pattern, or any other combination of left-winding and right-winding sections.

My own pragmatic takeaway from this is that sticking with just a power value of 2 seems fine and I'd then use multiplier values between 3 and 15 depending on how straight or tortuous I want the path to be.
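
To make the roles of the two parameters concrete, here is a minimal Python sketch of an edge cost. The exact formula, the name `edge_cost` and the `(x, y, altitude)` tuple layout are assumptions made for illustration, not necessarily the code behind the results above. The only things taken from the text are that a multiplier and a power are applied to the change in altitude, and that altitude is sampled only at the end-points of each edge.

```python
import math

def edge_cost(a, b, multiplier=5.0, power=2.0):
    """Cost of following the edge from a to b, each given as (x, y, altitude).

    Assumed form: the horizontal length, scaled up by a slope penalty.
    Altitude is read only at the two end-points of the edge.
    """
    horizontal = math.hypot(b[0] - a[0], b[1] - a[1])
    if horizontal == 0.0:
        return 0.0  # degenerate edge, nothing to walk
    slope = abs(b[2] - a[2]) / horizontal
    return horizontal * (1.0 + multiplier * slope ** power)
```

With a cost of this shape, a flat edge costs exactly its length, and raising the multiplier makes climbing more expensive relative to taking a detour, which is what pushes the path from straight towards tortuous.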

Perspectives

These generated paths were a proof-of-concept for a new project I'm working on and I'm sure I'll learn more as I go along. For example, already I'm learning some things about approaches for flattening the terrain around the paths which I may share at a later point when I'm a bit more sure of my findings. For now, I hope you found this useful!

2024 update: Code available

I've now released a framework called LayerProcGen as open source, and one of the sample scenes generates natural paths using the technique described in this article. So a fully functional implementation is now available for study and use.

The Cluster 2015 Retrospective

The Cluster is an exploration game I've been developing in my spare time for some time. You can see all posts about it here. It looks like I didn't write any posts about it for all of 2015, yet I've been far from idle.

By the end of 2014 I had done some ground work for fleshing out the structure of the world regions, but the game still didn't provide visible purpose and direction for the player.

My goal for 2015 was to get The Cluster in a state where it worked as a real game and I could hand it over to people to play it without needing instructions from me. Did The Cluster reach this goal in 2015? Yes and no.

I made a big to-do list with all the items needed to be done for this to work. (As always, the list was revised continuously.) I did manage to implement all these things so that the game in theory should be meaningfully playable. I consider that in itself a success and big milestone.

However, I performed a few play tests in the fall, and they revealed some issues. This was not really unexpected. I've developed games and play tested them before, and it always reveals issues and shows that things that were designed to be clearly understandable are not necessarily so. I don't consider this a failure as such - when I decided on my goal for 2015 I didn't make room for extensive iteration based on play test findings. I did manage to address some of the issues already - others will need to be addressed in 2016.

On the plus side, several players I had playing the game had a good time with it once they got into it with a little bit of help from me. In two instances they continued playing for much longer than I would have expected, and in one instance a play-tester completed clearing an entire region, which takes several hours. I think only a minority of players can get that engaged with the game in its current state, but it was still highly encouraging to see.

Essentials

Some boring but important stuff just had to be done. A main menu. A pause menu. Fading to black during loading and showing a progress bar. (I found out that estimating progress for procedural generation can be surprisingly tricky and involved. I now have a lot more understanding for unreliable progress bars in general.) Also, upgrading to Unity 5 and fixing some shaders etc.

Enemy combat

I had AI path-finding working long ago, but never wrapped up the AIs into fully functional enemies. In 2015 I implemented enemy bases in the world to give the enemies a place to spawn from and patrol around.

Enemy combat also entailed implementing health systems for player and enemies (with time-based healing for the player), implementing player death and reloading of state, and having the enemies be destroyed when the player enters certain safe zones.

For the combat I decided to return to a combat approach I used long ago where both player and enemies can hold only one piece of ammo at a time (a firestone). Once thrown, player or enemies have to look for a new firestone to pick up before they can attack again. This facilitates a gameplay alternating between attacking and evading. I noticed that the game Feist uses a similar approach (though the old version of my game that used this approach is much older than Feist).

I decided to begin to use behaviour trees for the high-level control of enemies. This included patrolling between points by default, spotting the player on sight, and pursuing the player, but first looking for firestones on the ground to use as ammo if not already carrying one. The enemies then return to patrolling once they have lost sight of the player for too long. Even AI logic as simple as this turned out to have quite some complexities and edge cases to handle.
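
The enemy logic described above can be sketched as a tiny behaviour tree. This is a minimal Python illustration, not the game's actual implementation: the node helpers, the state keys (`seconds_since_player_seen`, `has_firestone`), the action names and the `LOSE_SIGHT_AFTER` timeout are all hypothetical, and the "running" status that real behaviour trees usually have is left out for brevity.

```python
SUCCESS, FAILURE = "success", "failure"

def sequence(*children):
    """Run children in order; fail as soon as one of them fails."""
    def run(state):
        for child in children:
            if child(state) == FAILURE:
                return FAILURE
        return SUCCESS
    return run

def selector(*children):
    """Try children in order; succeed as soon as one of them succeeds."""
    def run(state):
        for child in children:
            if child(state) == SUCCESS:
                return SUCCESS
        return FAILURE
    return run

def condition(predicate):
    return lambda state: SUCCESS if predicate(state) else FAILURE

def action(name):
    """Leaf that just records which action the enemy should take."""
    def run(state):
        state["action"] = name
        return SUCCESS
    return run

LOSE_SIGHT_AFTER = 5.0  # seconds; hypothetical value

enemy_tree = selector(
    sequence(
        condition(lambda s: s["seconds_since_player_seen"] <= LOSE_SIGHT_AFTER),
        selector(
            sequence(condition(lambda s: s["has_firestone"]),
                     action("pursue_player")),
            action("fetch_firestone"),  # no ammo: look for a firestone first
        ),
    ),
    action("patrol"),  # default when the player has been out of sight too long
)

def decide(state):
    enemy_tree(state)
    return state["action"]
```

For example, `decide({"seconds_since_player_seen": 1.0, "has_firestone": False})` picks `"fetch_firestone"`, while an enemy that hasn't seen the player for a minute falls back to `"patrol"`.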

Conveying the world structure

The other big task on my list after enemy combat was making the world structure comprehensible and functional to the player.

Worlds in The Cluster are divided into large regions. One region has a central village and multiple shrines. All of those function as safe zones that instantly destroy enemies when entered and save the progress. In addition, a region has multiple artefact locations that are initially unknown and must be found and activated by the player. This basic structure was already in place by the end of 2014, but not yet communicated to the player in any way.

I've done my share of game design but I'm still not super experienced as a game designer. It took a lot of pondering and iteration to figure out how to effectively communicate everything that's needed to the player, and even then it's still far from perfect. In the end I've used several different ways to communicate the world structure that work in conjunction:

- Supporting it through the game mechanics.

- In-world as part of how the world looks.

- In meta communication, such as a map screen.

- Through text explanations / dialogue.

Supporting world structure through game mechanics

There are a number of game mechanics that are designed to support the world structure.

The artefacts that are hidden around the region can be discovered by chance by exploring randomly, but this can take quite a while and requires self-direction and determination that not all players have. To provide more of a direction, I introduced a mechanic that the shrines can reveal the approximate location of the nearest undiscovered artefact. This gives the player a smaller area to go towards and then search within.

In order to sustain most of the mystery for as long as possible, a new approximate artefact location can't be revealed until the existing one has been found. This also helps give the player a single clear goal, though they are still free to explore elsewhere if desired.
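
The two rules above (reveal the nearest undiscovered artefact, but only one approximate location at a time) can be sketched in Python as follows. The data layout, the function name `reveal_nearest_artefact`, the `search_radius` default and the way the search circle is offset from the true position are all assumptions made for illustration.

```python
import math
import random

def reveal_nearest_artefact(shrine_pos, artefacts, active_hint=None,
                            search_radius=50.0, rng=random):
    """Return a hint dict for the nearest undiscovered artefact, or None.

    artefacts: list of dicts with "pos" (x, y) and "found" (bool).
    active_hint: the hint revealed earlier, if any. While its artefact is
    still unfound, no new hint is given and the old one is returned as-is.
    """
    if active_hint is not None and not active_hint["artefact"]["found"]:
        return active_hint
    candidates = [a for a in artefacts if not a["found"]]
    if not candidates:
        return None  # everything in the region has been found
    nearest = min(candidates, key=lambda a: math.dist(shrine_pos, a["pos"]))
    # Shift the circle's center away from the true position so the artefact
    # lies somewhere inside the circle rather than exactly in the middle.
    angle = rng.uniform(0.0, 2.0 * math.pi)
    offset = rng.uniform(0.0, search_radius)
    center = (nearest["pos"][0] + offset * math.cos(angle),
              nearest["pos"][1] + offset * math.sin(angle))
    return {"artefact": nearest, "center": center, "radius": search_radius}
```

Returning the existing hint instead of raising an error keeps the shrine interaction simple: activating a shrine again just shows the same search area until that artefact has been found.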

Once an artefact is found, a shortcut in the form of a travel tube can be used to quickly get back to a more central place in the region. Initially the tube exit would be close to a shrine, but the player might subsequently miss the shrine and be aimless about where to go next. Based on early play tests, I changed the tubes to lead directly back to a shrine. This way the player can immediately choose to have a new approximate artefact location revealed.

World structure communicated in-world

I got the idea to create in-world road signs that point towards nearby locations in the region, such as the village and the various shrines. This both concretely provides directions for the player and increases immersion.

Particularly for a procedurally generated world, the signage can also help reinforce the notion that there is structure and reason to the world, as opposed to it being entirely random, which can be a preconception about procedurally generated worlds.

This entailed generating names for the locations and figuring out which structures to store them in. The signs can point to locations which are far outside the range of the world that is currently loaded at the max planning level. As such, the names of locations need to be generated as part of the overall region planning rather than as part of the more detailed but shorter range planning of individual places.
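
One way to make names available for locations that aren't loaded is to derive each name purely from data known at the region planning level. The Python sketch below assumes a world seed, a region coordinate and a location index as inputs. The function `location_name` and the syllable list are made up for illustration and are not how the game generates its names.

```python
import random

# Made-up syllables, purely for illustration.
SYLLABLES = ["ka", "lo", "mi", "ren", "tu", "sha", "vel", "dor"]

def location_name(world_seed, region, index):
    """Deterministic name for location number `index` in `region` (x, y).

    Only region-level inputs are used, so a road sign can ask for the name
    of a far-away location without that location being generated in detail.
    """
    rng = random.Random(f"{world_seed}:{region[0]}:{region[1]}:{index}")
    syllable_count = rng.randint(2, 3)
    name = "".join(rng.choice(SYLLABLES) for _ in range(syllable_count))
    return name.capitalize()
```

Because the generator is seeded from a string built out of those inputs, the same location gets the same name whenever it is asked for, regardless of which parts of the world are currently loaded.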

Next, I needed to make key locations look their part. I'm not a modeller, but I created some simple placeholder models and structures which at least can give the idea of a village and shrines.

Improved map screen

I had created a detailed map for the game long ago, but that didn't effectively communicate the larger overall structure of a region.

To remedy this I created a new map that shows the region structure. I've gone a bit back and forth between how the two maps integrate, but eventually I've concluded that combining them in one view produces too much confusing simultaneous information, so they are now mostly separate, with the map screen transitioning between the two as the player zooms in or out.

Here's examples of the detail-map and the region-map:

Apart from the map itself, I also added icons to the map to indicate the various locations as well as the position of the player. Certain locations in the game can be known but not yet discovered. This means the approximate location is known but not the exact position. These locations are marked with a question-mark in the icon and a dotted circle around it to indicate the area in which to search for the location.

Part of the work was also to keep track of discovered locations in the save system.

Dialogue system

Communicating structure and purpose through in-world signage and the map screen was not sufficient, so I started implementing a dialogue system in order to let characters in the game be able to explain things.

This too proved to be quite involved. Besides the system to just display text on screen in a nice way, there also needed to be a whole supporting system for controlling which dialogues should be shown where, depending on which kind of world state.

This can be complex enough for a manually designed game. For a procedural game, it's an additional concern how to design the code to place one-off dialogue triggers in among procedural algorithms that are used to generate hundreds of different places, without the code becoming cluttered in undesirable ways.
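
One possible way to keep such triggers out of the generation code is to describe them as data: a list of rules that each name a kind of place, a condition on the world state, and the text to show. The Python sketch below is only an illustration of that idea. The names `DialogueRule`, `RULES` and `pick_dialogue`, the `artefacts_found` state key and the placeholder texts are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional

WorldState = Dict[str, object]

@dataclass
class DialogueRule:
    place_kind: str                          # e.g. "shrine" or "village"
    condition: Callable[[WorldState], bool]  # when this dialogue applies
    text: str                                # placeholder text

# Rules are checked in order, so more specific ones go first.
RULES: List[DialogueRule] = [
    DialogueRule("shrine", lambda s: s.get("artefacts_found", 0) == 0,
                 "Placeholder: explain what shrines can reveal."),
    DialogueRule("shrine", lambda s: True,
                 "Placeholder: generic shrine greeting."),
    DialogueRule("village", lambda s: True,
                 "Placeholder: welcome to the village."),
]

def pick_dialogue(place_kind: str, state: WorldState,
                  rules: List[DialogueRule] = RULES) -> Optional[str]:
    """Return the text of the first rule matching this place and state."""
    for rule in rules:
        if rule.place_kind == place_kind and rule.condition(state):
            return rule.text
    return None
```

The generation code for a place then only needs to report what kind of place it is, and the lookup decides whether any dialogue applies in the current world state.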

What's next?

I hope to get The Cluster into a state where it's fully playable without any instructions in the first quarter of 2016.

After that I want to expand on the gameplay to make it more engaging and more varied.

As part of that I anticipate that I may need to revert the graphics in the game to a simpler look for a while. I've had a certain satisfaction from developing the gameplay and graphics of the game in parallel, since having something nice to look at is very satisfying to accomplish. However, now that I'll need to ramp up rapid development of more gameplay elements, having to make new gameplay gizmos match the same level of graphics will slow down the iteration process. For that reason I'll probably make the game have more of a prototype look for a while, where I can develop new gameplay with little or no time spent on graphics and looks.

Nevertheless, even with a much simpler look, I still want to retain some level of atmosphere, since one of the things I want to implement is more variety in moods. This is in extension to the game jam project A Study in Composition I worked on this year.

If you are interested in being a play tester for early builds of The Cluster, let me know. I can't say when I will start the next round of play testing, but I'm building up a list of people I can contact once the time is right. Play testing may involve talking and screen-sharing over e.g. Skype since I'll need to be able to observe the play session.

If you want to follow the development of The Cluster, you can follow The Cluster on Twitter or follow me.